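Plenty of tutorials online show how to build a Kubernetes cluster with kubeadm and similar tools. These tools can stand up a cluster quickly and even take HA into account. Building one by hand has a different benefit: you learn how the components relate to each other, which problems can come up along the way, and how to troubleshoot them. So I set off down this road of no return… This post records the process of building an HA Kubernetes cluster, from downloading the source code from GitHub to a finished cluster.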

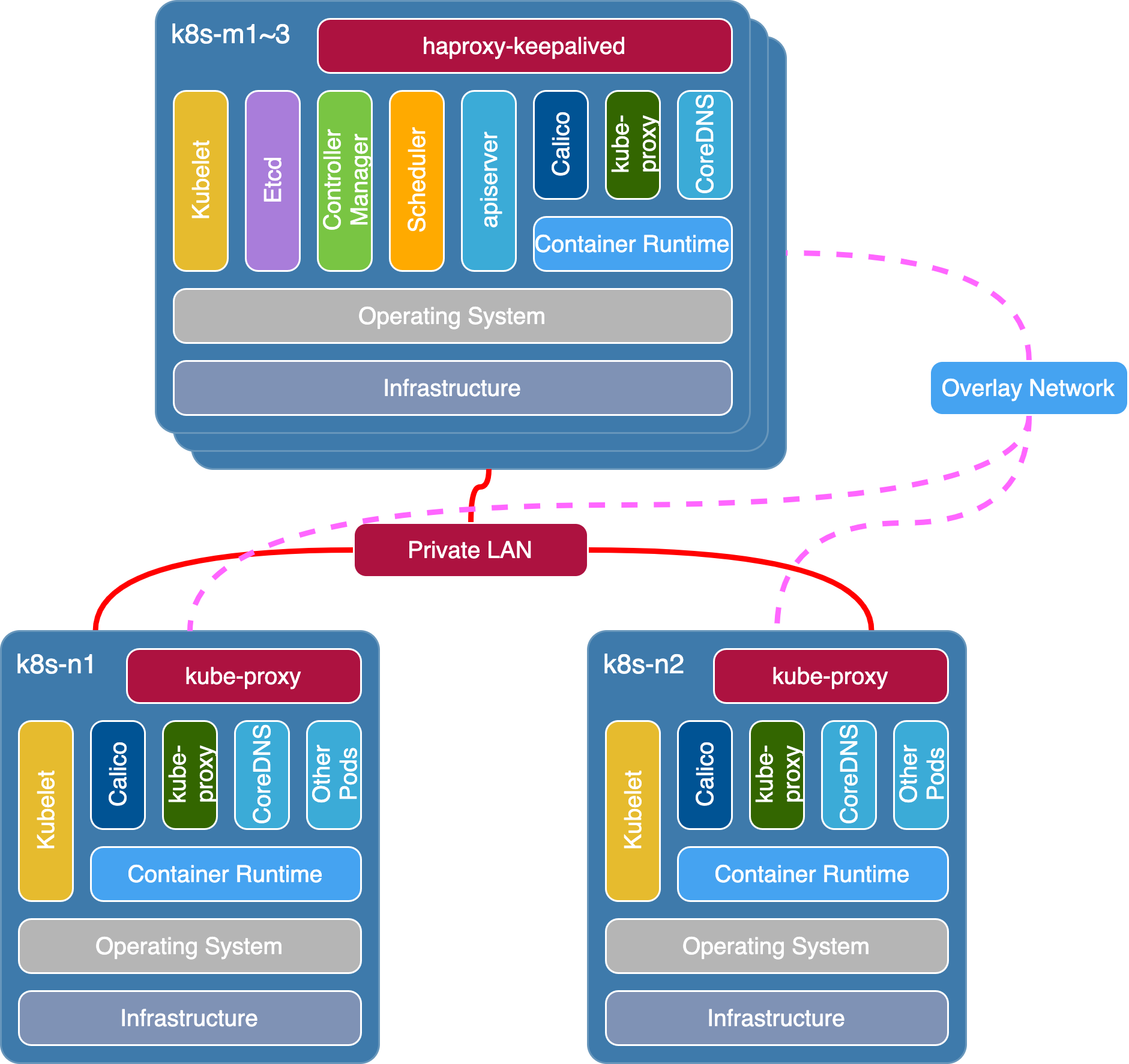

The overall architecture is shown in the figure below:

Environment and software information

Version information

Kubernetes: v1.19.7

Calico: v3.8.2

Etcd: v3.3.8

Docker: 20.10.2

Network information

Cluster IP CIDR: 10.244.0.0/16

Service Cluster IP CIDR: 10.96.0.0/12

Service DNS IP: 10.96.0.10

DNS DN: cluster.local

Kubernetes API VIP: 192.168.20.20

Node information

| IP Address | Hostname | CPU | Memory |
| --- | --- | --- | --- |
| 192.168.20.10 | k8s-m1 | 1 | 2GB |
| 192.168.20.11 | k8s-m2 | 1 | 2GB |
| 192.168.20.12 | k8s-m3 | 1 | 2GB |
| 192.168.20.13 | k8s-n1 | 1 | 2GB |
| 192.168.20.14 | k8s-n2 | 1 | 2GB |

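The environment runs as VMs on my workstation. Because of hardware constraints, every node is configured with 1 core and 2 GB of memory. For real deployments, adjust the sizing to your own needs and to the officially recommended minimum hardware requirements.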

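Prerequisites

Configure key-based transfers between nodes

Before installing the Kubernetes cluster, set up key-based authentication between all nodes. The main goal is to transfer configuration files between nodes during deployment without typing passwords, which makes the deployment faster and more convenient. Create a public/private key pair on each of k8s-m1, k8s-m2, k8s-m3, k8s-n1, and k8s-n2.

Key pairs are typically generated with `ssh-keygen`; you can press Enter at every prompt to accept the defaults.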
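The key generation step can also be scripted so it runs without prompts. The sketch below is one possible way to do it, not the exact command used in this walkthrough: the helper name `ensure_ssh_key` and the 3072-bit RSA size are assumptions for illustration.

```shell
# Sketch: create an RSA key pair only if one does not exist yet.
# The function name and the 3072-bit key size are illustrative assumptions.
ensure_ssh_key() {
    key_file="$1"
    if [ -f "$key_file" ]; then
        echo "key already exists: $key_file"
        return 0
    fi
    mkdir -p "$(dirname "$key_file")"
    # -N "" sets an empty passphrase; -q suppresses the progress output.
    ssh-keygen -q -t rsa -b 3072 -N "" -f "$key_file"
}

# Usage on each node:
#   ensure_ssh_key "$HOME/.ssh/id_rsa"
```

Checking for an existing key first keeps the step safe to rerun, since `ssh-keygen` would otherwise ask whether to overwrite the file.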

On k8s-m1, print the public key.

    $ cat ~/.ssh/id_rsa.pub
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQC8wjXDlJD0MT1Y/4UAzs4MHCtOvMHDKjsECMgP3u4iemZrntpz247Qn0jZlhM1LBTIWZV6B3vPkfZ52uY0AW3we2prl4z2/E48AQi09D/RnU5h974EtXAaB0C6XfZwLjfzJmG+8iEVOPjcdUHK5ay4XOaJpncazvyt/B75pfeYIi6P8MxLropiDKLghcenmmQtXGDnNQ/wB/SfeOdcEoU4SiM8U65ZdHf8TaVhtODyat6jBktyFkrbgWLZPLEaz/eEe9b7+ybIpEqSV8TGLFGHi0xORGrpoD+3VnSfWRKu/emX1q9CDpRlFK+sCmftMasw1sOUBVLBbcD77pWPNA73HjwuVax/4JXMXwSdkuupZ3bB6nDn+xnuuMaNRFz/JLERCZx+RXup3Nvz9bGgtzTV4BAG806Q1tNW7ogm6GzCjd6pBBp58lEPHpmzhogRl1RdEHsIJjj96qfm6PQEmVuQWhrU9a8B7Fyh+VjifzaIJaDDAx0gs7d3gP/qRo2bsEU= root@k8s-m1

Repeat the step above to collect the public keys of k8s-m1, k8s-m2, k8s-m3, k8s-n1, and k8s-n2, and write all of them into ~/.ssh/authorized_keys. Save and exit when done.

    $ vim ~/.ssh/authorized_keys
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQC8wjXDlJD0MT1Y/4UAzs4MHCtOvMHDKjsECMgP3u4iemZrntpz247Qn0jZlhM1LBTIWZV6B3vPkfZ52uY0AW3we2prl4z2/E48AQi09D/RnU5h974EtXAaB0C6XfZwLjfzJmG+8iEVOPjcdUHK5ay4XOaJpncazvyt/B75pfeYIi6P8MxLropiDKLghcenmmQtXGDnNQ/wB/SfeOdcEoU4SiM8U65ZdHf8TaVhtODyat6jBktyFkrbgWLZPLEaz/eEe9b7+ybIpEqSV8TGLFGHi0xORGrpoD+3VnSfWRKu/emX1q9CDpRlFK+sCmftMasw1sOUBVLBbcD77pWPNA73HjwuVax/4JXMXwSdkuupZ3bB6nDn+xnuuMaNRFz/JLERCZx+RXup3Nvz9bGgtzTV4BAG806Q1tNW7ogm6GzCjd6pBBp58lEPHpmzhogRl1RdEHsIJjj96qfm6PQEmVuQWhrU9a8B7Fyh+VjifzaIJaDDAx0gs7d3gP/qRo2bsEU= root@k8s-m1
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDBEK6W4AbGoTJf3s78Vud22I3PJ7DowCUi94e0wTrzHc4OQ125ve+lVXHd7rb+tXNwe8taW9YFK1RTxsJo7zIyBPeLhpaGJEYh6TdG2C6lDurv+L2UnZx6pH4eqDJetDpHFfeHd+6Ih9c+oEmIYdBIYJGSsrGRmOq7HCbVgMgs49Is/bOZ2gpnGHvUzUCniq2WVjp5Ur4bSO+rF3UkC7c8dWQqN65ltRR1h6S/t5ZgLcB9+ipuxwxehosGTCsHY2tt9x24juYAqCzhFTjT+QIlvVsecFXyOS2kKlksruDCfCW4FEs+fgZF0AvktHvOSemw+R6POaBfi6RQcFgcQMFD6Eou1O2T5D6onRMprBHCPK2WEA/OjaKDKIsIe5SM/mkMf8pAM8GkxJbfnSj8zjnq0VvGfRivMeCMt4S084WNXMit3z4c2usa5ijXnCFSjtkqzwKXlFpIB+rGEBdOPPaPAOoHqk8bjHTVVjXRyVvQ0JxXiqMn10iYGBM722rYQj8= root@k8s-m2
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDJYVOpjomcDnjinpcEilH45X5ybZixLGy31cRdIR/bmJ0KJhikfnhGrF1mVlIJCDyJrEVMf3HZaG/EY14JJrW+XKcgnXtfcV/da52phY4aJtPWuSfaGezA3fr4DywE6R4JuHt0QfqEZjW8PeGMU5aXYEndxHiR/Ztk7dDw0qYR02T5CRbdYZmy8JHf9ERjwh904dan9mqkkgYNHZVu9ZbFFl9659U4wRWPrbYqcn5gVwxCpb+Pfan0RDujMwxooCVBJY84hnw7H6THqHLEyvQeZ/55n9CXGJoDwfUudzbWO7hkcHF0UdTAUA56yxga37s62qgeTrfBMN0laaq2I9rDX2AeMaAcrxXz+KGjEfEPNjhmqTqGCX9WOSLzz6FzqgW7f8Gstv8RL3zJ6FPjEosvozI9A1/D1II1B63saJULgRg22zz1PoEmXJqNE9HpT9uU3HpvyU4Urq08k8tpXc/wQV/XFWCQbfWlDsXLHtq0NttdKYgDFmorH4dzuDfavJ8= root@k8s-m3
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQC9ZNGaJ7mYgGTk8cSr+DdRN4l8PAi6DtnHFdSKEnClqq6gb2F2ubFezc9BCVo1qZygYEve1eZ+FkSDn3N/U5k1fD9sR4eFXtxSdzYkCVaWO4g/SGDXJCikqjI9UMvjbk0AypYmKtnjupsaPMmwV0j0seIHHjhF5sXZAYHXsXwRyglUWMHgWfhlLDNvTpWCRuOj+AKu6/afMReJv+AOlvMjoHewIGuq2TT3A/DXv+L3iivETx2PiCRKmLtrcgVhT+atAGdqqra8WHzmiW7vKssPMWtwXGad6CC18mO1Ym69VfTSaXEjLpHY1e7/keKx+dakBjHds9G3Nv1qiXuQrqMU99AQlpCFV1UakUt7qkwf3pAzlTv6/Y7WhP00Gcw8KCDM4kyNfJn9loJaHeYv5w8YsN7lBqUtvTRK0dHF22dvR7G3Itsd3taSWMhu9w4KhUFvz45l3958cza22Y/luj6IFOZ+UaWSkywj9Do68XfnowONQY+Ctt2Y2D4IZZiiT2M= root@k8s-n1
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCqKk7tZOwjcXae7xW5hr3gYpcEof9+C4QULEIEgbQWra/RUGKOp0Hvj0/JoBZmGwXvKSiRdQ1DizS0Y1vrCUpi1aYwIwjTs7cyJQ3Q5t763yFMA3CfeM7lfs8AXhRmimWjkUAyXcOeJ+I7h8yxEaN7EBP/ylv5dw/lD/qr38v3fojYlPCbcqwC8y8R0zzla6QVVBXy5ImxhONV53erAcp1o7z0IKIt+6MECb7SebWBqlfcfO7jMM60bOSFKogSgH3Rb/tIeXEf0FY4n4lplptJxohhA2/1GhM6E48soaY+SL5J4FmLlhxO3bs0RwKmvusUbt8qTMwn78dZXVfnP8dNGnf6O5I7vC+cOI/JvmKngjI5i3xxUSvackBv80HyytfedhFeV+effjjJvKYwHvfis8+lg3iGXP4gYfOSffWl4IoBo+G0XO/20+z06/A4/ep6O2qe+SeOfdz+b2gzC4UxJWFfI3MbHljn5QKuCRst93wKzy19bsfuhr+1+Q1hdZU= root@k8s-n2

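Do not copy the example public keys above into your ~/.ssh/authorized_keys file. Generate your own key pairs in the previous step and use your own machines' public keys.

Repeat the steps above so that the same content is written to ~/.ssh/authorized_keys on k8s-m2, k8s-m3, k8s-n1, and k8s-n2.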

Next, register the IP address and hostname of every node on k8s-m1, k8s-m2, k8s-m3, k8s-n1, and k8s-n2.

    $ vim /etc/hosts
    ...
    192.168.20.10 k8s-m1
    192.168.20.11 k8s-m2
    192.168.20.12 k8s-m3
    192.168.20.13 k8s-n1
    192.168.20.14 k8s-n2

Use the IP addresses and hostnames you assigned yourself, and append them to the bottom of /etc/hosts on every node.
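If you prefer not to edit the file by hand on five nodes, the entries can be appended with a small script. This is a minimal sketch under the addresses used in this post; the helper name `add_host_entries` is hypothetical, and the file path is passed in so the script can be tried on a scratch file first.

```shell
# Sketch: append the node entries to a hosts file without duplicating them.
# The helper name is hypothetical; the default target is /etc/hosts.
add_host_entries() {
    hosts_file="${1:-/etc/hosts}"
    while read -r ip name; do
        [ -n "$ip" ] || continue
        # Skip the entry if the hostname already appears as a whole word.
        if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<EOF
192.168.20.10 k8s-m1
192.168.20.11 k8s-m2
192.168.20.12 k8s-m3
192.168.20.13 k8s-n1
192.168.20.14 k8s-n2
EOF
}

# Usage (as root): add_host_entries /etc/hosts
```

Running it twice leaves the file unchanged the second time, which helps when a node is rebuilt partway through the deployment.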

After completing all the steps above, confirm that every node can log in to every other node without a password.

    $ ssh k8s-m2
    The authenticity of host 'k8s-m2 (192.168.20.11)' can't be established.
    ECDSA key fingerprint is SHA256:FtCGiDnzzrbYlo8+1w8nPocBOm1fX92/5YUbRItfN60.
    Are you sure you want to continue connecting (yes/no/[fingerprint])?

This message appears on the first login; type yes to continue.
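Once the host keys have been accepted, the check can be repeated across all nodes in one pass. The sketch below relies on OpenSSH's `BatchMode=yes` option, which makes `ssh` fail instead of prompting for a password. The `SSH_BIN` variable and the function name are assumptions added for illustration.

```shell
# Sketch: report which nodes accept key-based login without prompting.
# BatchMode=yes makes ssh fail rather than ask for a password or confirmation,
# so hosts whose keys are not yet in known_hosts are reported as FAILED.
# SSH_BIN is an illustrative knob so the ssh binary can be swapped out.
SSH_BIN="${SSH_BIN:-ssh}"
NODES="k8s-m1 k8s-m2 k8s-m3 k8s-n1 k8s-n2"

check_passwordless_ssh() {
    failed=0
    for node in $NODES; do
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$node" true 2>/dev/null; then
            echo "$node: ok"
        else
            echo "$node: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Usage on each node: check_passwordless_ssh
```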

Install Docker CE

Before installing Kubernetes, choose a container runtime to install on k8s-m1, k8s-m2, k8s-m3, k8s-n1, and k8s-n2 so that containers can run. Common choices include Docker, containerd, and CRI-O. This post uses Docker.

Update the package index and install the dependencies.

    $ apt update
    $ apt install -y \
        apt-transport-https \
        ca-certificates \
        curl \
        gnupg-agent \
        software-properties-common

Add Docker's official GPG key.

    $ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

Configure the stable repository.

    $ sudo add-apt-repository \
        "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
        $(lsb_release -cs) \
        stable"

Update the package index and install Docker CE.

    $ apt update
    $ apt install -y docker-ce docker-ce-cli containerd.io

After the installation finishes, verify the Docker environment.

    $ docker version
    Client: Docker Engine - Community
     Version:           20.10.3
     API version:       1.41
     Go version:        go1.13.15
     Git commit:        48d30b5
     Built:             Fri Jan 29 14:33:21 2021
     OS/Arch:           linux/amd64
     Context:           default
     Experimental:      true

    Server: Docker Engine - Community
     Engine:
      Version:          20.10.3
      API version:      1.41 (minimum version 1.12)
      Go version:       go1.13.15
      Git commit:       46229ca
      Built:            Fri Jan 29 14:31:32 2021
      OS/Arch:          linux/amd64
      Experimental:     false
     containerd:
      Version:          1.4.3
      GitCommit:        269548fa27e0089a8b8278fc4fc781d7f65a939b
     runc:
      Version:          1.0.0-rc92
      GitCommit:        ff819c7e9184c13b7c2607fe6c30ae19403a7aff
     docker-init:
      Version:          0.19.0
      GitCommit:        de40ad0

Build Kubernetes from source (on k8s-m1 only)

Install required packages

Building Kubernetes requires the make command. Part of the source code is written in C, so the gcc package is needed as well.

    $ apt update
    $ apt install -y make gcc

Install Golang

Because this post covers building the binaries from source, a Go environment is required. We use Go 1.15.7 here; adjust the version to your needs.

Download Go 1.15.7.

    $ wget https://golang.org/dl/go1.15.7.linux-amd64.tar.gz

After the download finishes, extract go1.15.7.linux-amd64.tar.gz into the /usr/local/ directory.

    $ tar -C /usr/local -xzf go1.15.7.linux-amd64.tar.gz

Add /usr/local/go/bin to the PATH environment variable.

    $ export PATH=$PATH:/usr/local/go/bin

Confirm that the Go environment works, for example by running `go version`.

Download and build the Kubernetes source code

Pick the version you want to install from the Kubernetes GitHub repository. This post uses v1.19.7; download the corresponding .tar.gz file.

    $ wget https://github.com/kubernetes/kubernetes/archive/v1.19.7.tar.gz

After the download finishes, extract v1.19.7.tar.gz.

    $ tar -xvf v1.19.7.tar.gz

Extraction creates a kubernetes-1.19.7 directory in the current directory. Enter it and build the binaries.

    $ cd kubernetes-1.19.7
    $ make
    $ cd ..

The build takes a while…

When the build finishes, a new _output directory appears inside kubernetes-1.19.7. All the compiled binaries are under kubernetes-1.19.7/_output/bin/.

    $ ls kubernetes-1.19.7/_output/bin
    apiextensions-apiserver deepcopy-gen e2e_node.test gendocs genman genyaml go2make go-runner kube-aggregator kube-controller-manager kubelet kube-proxy linkcheck openapi-gen
    conversion-gen defaulter-gen e2e.test genkubedocs genswaggertypedocs ginkgo go-bindata kubeadm kube-apiserver kubectl kubemark kube-scheduler mounter prerelease-lifecycle-gen

Copy the binaries to the corresponding nodes.

    $ cp kubernetes-1.19.7/_output/bin/kubectl /usr/local/bin
    $ cp kubernetes-1.19.7/_output/bin/kube-apiserver /usr/local/bin
    $ cp kubernetes-1.19.7/_output/bin/kube-scheduler /usr/local/bin
    $ cp kubernetes-1.19.7/_output/bin/kube-controller-manager /usr/local/bin
    $ cp kubernetes-1.19.7/_output/bin/kubelet /usr/local/bin
    $ scp kubernetes-1.19.7/_output/bin/kubectl k8s-m2:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kube-apiserver k8s-m2:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kube-scheduler k8s-m2:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kube-controller-manager k8s-m2:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kubelet k8s-m2:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kubectl k8s-m3:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kube-apiserver k8s-m3:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kube-scheduler k8s-m3:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kube-controller-manager k8s-m3:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kubelet k8s-m3:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kubelet k8s-n1:/usr/local/bin/
    $ scp kubernetes-1.19.7/_output/bin/kubelet k8s-n2:/usr/local/bin/
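The seventeen copy commands follow a regular pattern, so they can be generated from two lists. The sketch below only prints the commands, which lets you review them before piping the output to `sh`. The variable and function names are illustrative and not part of the original procedure.

```shell
# Sketch: print the copy commands for every node instead of typing them by hand.
# Variable and function names are illustrative.
BIN_DIR="kubernetes-1.19.7/_output/bin"
MASTER_BINS="kubectl kube-apiserver kube-scheduler kube-controller-manager kubelet"

print_copy_commands() {
    # Local copies on k8s-m1.
    for bin in $MASTER_BINS; do
        echo "cp $BIN_DIR/$bin /usr/local/bin/"
    done
    # The other masters receive the same set of binaries.
    for node in k8s-m2 k8s-m3; do
        for bin in $MASTER_BINS; do
            echo "scp $BIN_DIR/$bin $node:/usr/local/bin/"
        done
    done
    # Worker nodes only receive kubelet at this step.
    for node in k8s-n1 k8s-n2; do
        echo "scp $BIN_DIR/kubelet $node:/usr/local/bin/"
    done
}

print_copy_commands
```

To execute the commands rather than print them, run `print_copy_commands | sh` from the directory that contains kubernetes-1.19.7.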

Create a buildrc environment file to simplify the remaining installation steps.

    $ vim buildrc
    CFSSL_URL=https://pkg.cfssl.org/R1.2
    DIR=/etc/etcd/ssl
    K8S_DIR=/etc/kubernetes
    PKI_DIR=${K8S_DIR}/pki
    KUBE_APISERVER=https://192.168.20.20:6443
    TOKEN_ID=4a378c
    TOKEN_SECRET=0c0751149cb12dd1
    BOOTSTRAP_TOKEN=${TOKEN_ID}.${TOKEN_SECRET}

TOKEN_ID can be generated with `openssl rand -hex 3`.

TOKEN_SECRET can be generated with `openssl rand -hex 8`.
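The two values can be generated and combined in one step. This is a small sketch; the `rand_hex` helper and its `/dev/urandom` fallback are assumptions added for machines without openssl, and are not part of the original procedure.

```shell
# Sketch: generate a bootstrap token of the form <6 hex chars>.<16 hex chars>.
# rand_hex is a hypothetical helper; the od fallback is an added assumption.
rand_hex() {
    bytes="$1"
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex "$bytes"
    else
        od -An -N"$bytes" -tx1 /dev/urandom | tr -d ' \n'
    fi
}

TOKEN_ID="$(rand_hex 3)"        # 3 random bytes -> 6 hex characters
TOKEN_SECRET="$(rand_hex 8)"    # 8 random bytes -> 16 hex characters
BOOTSTRAP_TOKEN="${TOKEN_ID}.${TOKEN_SECRET}"
echo "$BOOTSTRAP_TOKEN"
```

The printed value has the same shape as the `4a378c.0c0751149cb12dd1` example in buildrc and can be pasted there.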

Once the file is created, load it into the current shell environment, for example with `source buildrc`.

Create the CAs and generate TLS certificates (on k8s-m1 only)

Install the cfssl tools, which are used to create the CAs and generate the TLS certificates.

    $ wget ${CFSSL_URL}/cfssl_linux-amd64 -O /usr/local/bin/cfssl
    $ wget ${CFSSL_URL}/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson
    $ chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

Etcd

Create etcd-ca-csr.json with the following content.

    $ vim etcd-ca-csr.json
    {
      "CN": "etcd",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "etcd",
          "OU": "Etcd Security"
        }
      ]
    }

Create the /etc/etcd/ssl directory and generate the Etcd CA.

    $ mkdir -p ${DIR}
    $ cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare ${DIR}/etcd-ca

    2021/02/03 06:57:49 [INFO] generating a new CA key and certificate from CSR
    2021/02/03 06:57:49 [INFO] generate received request
    2021/02/03 06:57:49 [INFO] received CSR
    2021/02/03 06:57:49 [INFO] generating key: rsa-2048
    2021/02/03 06:57:50 [INFO] encoded CSR
    2021/02/03 06:57:50 [INFO] signed certificate with serial number 32327654678613319205867135393400903527408111164

After the CA is generated, create ca-config.json and etcd-csr.json.

    $ vim ca-config.json
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ],
            "expiry": "87600h"
          }
        }
      }
    }

    $ vim etcd-csr.json
    {
      "CN": "etcd",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "etcd",
          "OU": "Etcd Security"
        }
      ]
    }

Generate the Etcd certificate.

    $ cfssl gencert \
        -ca=${DIR}/etcd-ca.pem \
        -ca-key=${DIR}/etcd-ca-key.pem \
        -config=ca-config.json \
        -hostname=127.0.0.1,192.168.20.10,192.168.20.11,192.168.20.12,192.168.20.20 \
        -profile=kubernetes \
        etcd-csr.json | cfssljson -bare ${DIR}/etcd

    2021/02/03 07:04:11 [INFO] generate received request
    2021/02/03 07:04:11 [INFO] received CSR
    2021/02/03 07:04:11 [INFO] generating key: rsa-2048
    2021/02/03 07:04:11 [INFO] encoded CSR
    2021/02/03 07:04:11 [INFO] signed certificate with serial number 66652898153558259936883336779780180424154374600

Remove the files that are no longer needed and check the /etc/etcd/ssl directory.

    $ rm -rf ${DIR}/*.csr
    $ ls /etc/etcd/ssl
    etcd-ca-key.pem  etcd-ca.pem  etcd-key.pem  etcd.pem

Create the /etc/etcd/ssl directory on k8s-m2 and k8s-m3.

    $ mkdir -p /etc/etcd/ssl

Then copy the etcd certificates created on k8s-m1 to k8s-m2 and k8s-m3.

    $ scp /etc/etcd/ssl/* root@k8s-m2:/etc/etcd/ssl/
    etcd-ca-key.pem    100% 1675     1.3MB/s   00:00
    etcd-ca.pem        100% 1359     2.5MB/s   00:00
    etcd-key.pem       100% 1675     3.0MB/s   00:00
    etcd.pem           100% 1444     2.2MB/s   00:00

    $ scp /etc/etcd/ssl/* root@k8s-m3:/etc/etcd/ssl/
    etcd-ca-key.pem    100% 1675     1.3MB/s   00:00
    etcd-ca.pem        100% 1359     2.5MB/s   00:00
    etcd-key.pem       100% 1675     3.0MB/s   00:00
    etcd.pem           100% 1444     2.2MB/s   00:00

Kubernetes components

Create ca-csr.json with the following content.

    $ vim ca-csr.json
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "Kubernetes",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Create the /etc/kubernetes/pki directory and generate the Kubernetes CA.

    $ mkdir -p ${PKI_DIR}
    $ cfssl gencert -initca ca-csr.json | cfssljson -bare ${PKI_DIR}/ca

    2021/02/03 07:16:02 [INFO] generating a new CA key and certificate from CSR
    2021/02/03 07:16:02 [INFO] generate received request
    2021/02/03 07:16:02 [INFO] received CSR
    2021/02/03 07:16:02 [INFO] generating key: rsa-2048
    2021/02/03 07:16:02 [INFO] encoded CSR
    2021/02/03 07:16:02 [INFO] signed certificate with serial number 528600369434105202111308119706767425710162291488

    $ ls ${PKI_DIR}/ca*.pem
    /etc/kubernetes/pki/ca-key.pem  /etc/kubernetes/pki/ca.pem

KUBE_APISERVER is the Kubernetes API VIP.

API Server

The API Server certificate is needed because the kubelet and the API Server communicate over TLS; the certificate must be verified and match before access is allowed.

Create apiserver-csr.json with the following content.

    $ vim apiserver-csr.json
    {
      "CN": "kube-apiserver",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "Kubernetes",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the API Server certificate.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -hostname=10.96.0.1,192.168.20.20,127.0.0.1,kubernetes.default \
        -profile=kubernetes \
        apiserver-csr.json | cfssljson -bare ${PKI_DIR}/apiserver

    2021/02/03 07:20:17 [INFO] generate received request
    2021/02/03 07:20:17 [INFO] received CSR
    2021/02/03 07:20:17 [INFO] generating key: rsa-2048
    2021/02/03 07:20:17 [INFO] encoded CSR
    2021/02/03 07:20:17 [INFO] signed certificate with serial number 85874010554668635601954999407030722935833248735

    $ ls ${PKI_DIR}/apiserver*.pem
    /etc/kubernetes/pki/apiserver-key.pem  /etc/kubernetes/pki/apiserver.pem

Front Proxy Client

This certificate is used for the Authenticating Proxy.

Create front-proxy-ca-csr.json and front-proxy-client-csr.json with the following content.

    $ vim front-proxy-ca-csr.json
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      }
    }

    $ vim front-proxy-client-csr.json
    {
      "CN": "front-proxy-client",
      "key": {
        "algo": "rsa",
        "size": 2048
      }
    }

Generate the Front Proxy CA.

    $ cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare ${PKI_DIR}/front-proxy-ca

    2021/02/03 07:37:06 [INFO] generating a new CA key and certificate from CSR
    2021/02/03 07:37:06 [INFO] generate received request
    2021/02/03 07:37:06 [INFO] received CSR
    2021/02/03 07:37:06 [INFO] generating key: rsa-2048
    2021/02/03 07:37:06 [INFO] encoded CSR
    2021/02/03 07:37:06 [INFO] signed certificate with serial number 481641924005786643792808437563979167221771356108

    $ ls ${PKI_DIR}/front-proxy-ca*.pem
    /etc/kubernetes/pki/front-proxy-ca-key.pem  /etc/kubernetes/pki/front-proxy-ca.pem

Generate the Front Proxy Client certificate.

    $ cfssl gencert \
        -ca=${PKI_DIR}/front-proxy-ca.pem \
        -ca-key=${PKI_DIR}/front-proxy-ca-key.pem \
        -config=ca-config.json \
        -profile=kubernetes \
        front-proxy-client-csr.json | cfssljson -bare ${PKI_DIR}/front-proxy-client

    2021/02/03 07:39:24 [INFO] generate received request
    2021/02/03 07:39:24 [INFO] received CSR
    2021/02/03 07:39:24 [INFO] generating key: rsa-2048
    2021/02/03 07:39:24 [INFO] encoded CSR
    2021/02/03 07:39:24 [INFO] signed certificate with serial number 1905061514940697505880815112674117856971444676
    2021/02/03 07:39:24 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").

    $ ls ${PKI_DIR}/front-proxy-client*.pem
    /etc/kubernetes/pki/front-proxy-client-key.pem  /etc/kubernetes/pki/front-proxy-client.pem

Controller Manager

This certificate lets the Controller Manager communicate with the API Server.

Create manager-csr.json with the following content.

    $ vim manager-csr.json
    {
      "CN": "system:kube-controller-manager",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "system:kube-controller-manager",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the Controller Manager certificate.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -profile=kubernetes \
        manager-csr.json | cfssljson -bare ${PKI_DIR}/controller-manager

    2021/02/03 07:43:42 [INFO] generate received request
    2021/02/03 07:43:42 [INFO] received CSR
    2021/02/03 07:43:42 [INFO] generating key: rsa-2048
    2021/02/03 07:43:42 [INFO] encoded CSR
    2021/02/03 07:43:42 [INFO] signed certificate with serial number 418276260904613293449744811510235026978328799057
    2021/02/03 07:43:42 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").

    $ ls ${PKI_DIR}/controller-manager*.pem
    /etc/kubernetes/pki/controller-manager-key.pem  /etc/kubernetes/pki/controller-manager.pem

Next, use kubectl to generate the Controller Manager kubeconfig file.

    $ kubectl config set-cluster kubernetes \
        --certificate-authority=${PKI_DIR}/ca.pem \
        --embed-certs=true \
        --server=${KUBE_APISERVER} \
        --kubeconfig=${K8S_DIR}/controller-manager.conf

    Cluster "kubernetes" set.

    $ kubectl config set-credentials system:kube-controller-manager \
        --client-certificate=${PKI_DIR}/controller-manager.pem \
        --client-key=${PKI_DIR}/controller-manager-key.pem \
        --embed-certs=true \
        --kubeconfig=${K8S_DIR}/controller-manager.conf

    User "system:kube-controller-manager" set.

    $ kubectl config set-context system:kube-controller-manager@kubernetes \
        --cluster=kubernetes \
        --user=system:kube-controller-manager \
        --kubeconfig=${K8S_DIR}/controller-manager.conf

    Context "system:kube-controller-manager@kubernetes" created.

    $ kubectl config use-context system:kube-controller-manager@kubernetes \
        --kubeconfig=${K8S_DIR}/controller-manager.conf

    Switched to context "system:kube-controller-manager@kubernetes".

Scheduler

This certificate creates the system:kube-scheduler user, which is bound to the system:kube-scheduler RBAC Cluster Role so that the Scheduler can communicate with the API Server.

Create scheduler-csr.json with the following content.

    $ vim scheduler-csr.json
    {
      "CN": "system:kube-scheduler",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "system:kube-scheduler",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the Scheduler certificate.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -profile=kubernetes \
        scheduler-csr.json | cfssljson -bare ${PKI_DIR}/scheduler

    2021/02/04 02:24:52 [INFO] generate received request
    2021/02/04 02:24:52 [INFO] received CSR
    2021/02/04 02:24:52 [INFO] generating key: rsa-2048
    2021/02/04 02:24:53 [INFO] encoded CSR
    2021/02/04 02:24:53 [INFO] signed certificate with serial number 466136919804747115564144841197200619289748718364
    2021/02/04 02:24:53 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").

    $ ls ${PKI_DIR}/scheduler*.pem
    /etc/kubernetes/pki/scheduler-key.pem  /etc/kubernetes/pki/scheduler.pem

Next, use kubectl to generate the Scheduler kubeconfig file.

    $ kubectl config set-cluster kubernetes \
        --certificate-authority=${PKI_DIR}/ca.pem \
        --embed-certs=true \
        --server=${KUBE_APISERVER} \
        --kubeconfig=${K8S_DIR}/scheduler.conf

    Cluster "kubernetes" set.

    $ kubectl config set-credentials system:kube-scheduler \
        --client-certificate=${PKI_DIR}/scheduler.pem \
        --client-key=${PKI_DIR}/scheduler-key.pem \
        --embed-certs=true \
        --kubeconfig=${K8S_DIR}/scheduler.conf

    User "system:kube-scheduler" set.

    $ kubectl config set-context system:kube-scheduler@kubernetes \
        --cluster=kubernetes \
        --user=system:kube-scheduler \
        --kubeconfig=${K8S_DIR}/scheduler.conf

    Context "system:kube-scheduler@kubernetes" created.

    $ kubectl config use-context system:kube-scheduler@kubernetes \
        --kubeconfig=${K8S_DIR}/scheduler.conf

    Switched to context "system:kube-scheduler@kubernetes".

Admin

The Admin certificate is bound to the cluster-admin RBAC Cluster Role. Any operation a user performs against the Kubernetes cluster goes through this kubeconfig.

Create admin-csr.json with the following content.

    $ vim admin-csr.json
    {
      "CN": "admin",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "ST": "Taipei",
          "L": "Taipei",
          "O": "system:masters",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the Kubernetes Admin certificate.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -profile=kubernetes \
        admin-csr.json | cfssljson -bare ${PKI_DIR}/admin

    2021/02/04 02:31:44 [INFO] generate received request
    2021/02/04 02:31:44 [INFO] received CSR
    2021/02/04 02:31:44 [INFO] generating key: rsa-2048
    2021/02/04 02:31:45 [INFO] encoded CSR
    2021/02/04 02:31:45 [INFO] signed certificate with serial number 716277526431619847152658152532600653141842093439
    2021/02/04 02:31:45 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").

    $ ls ${PKI_DIR}/admin*.pem
    /etc/kubernetes/pki/admin-key.pem  /etc/kubernetes/pki/admin.pem

Next, use kubectl to generate the Admin kubeconfig file.

    $ kubectl config set-cluster kubernetes \
        --certificate-authority=${PKI_DIR}/ca.pem \
        --embed-certs=true \
        --server=${KUBE_APISERVER} \
        --kubeconfig=${K8S_DIR}/admin.conf

    Cluster "kubernetes" set.

    $ kubectl config set-credentials kubernetes-admin \
        --client-certificate=${PKI_DIR}/admin.pem \
        --client-key=${PKI_DIR}/admin-key.pem \
        --embed-certs=true \
        --kubeconfig=${K8S_DIR}/admin.conf

    User "kubernetes-admin" set.

    $ kubectl config set-context kubernetes-admin@kubernetes \
        --cluster=kubernetes \
        --user=kubernetes-admin \
        --kubeconfig=${K8S_DIR}/admin.conf

    Context "kubernetes-admin@kubernetes" created.

    $ kubectl config use-context kubernetes-admin@kubernetes \
        --kubeconfig=${K8S_DIR}/admin.conf

    Switched to context "kubernetes-admin@kubernetes".

Masters Kubelet

Node Authorization is used so that the kubelet on each master can access the API Server.

Create kubelet-k8s-m1-csr.json with the following content.

    $ vim kubelet-k8s-m1-csr.json
    {
      "CN": "system:node:k8s-m1",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "L": "Taipei",
          "ST": "Taipei",
          "O": "system:nodes",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the kubelet certificate for k8s-m1.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -hostname=k8s-m1 \
        -profile=kubernetes \
        kubelet-k8s-m1-csr.json | cfssljson -bare ${PKI_DIR}/kubelet-k8s-m1;

    2021/02/04 05:43:14 [INFO] generate received request
    2021/02/04 05:43:14 [INFO] received CSR
    2021/02/04 05:43:14 [INFO] generating key: rsa-2048
    2021/02/04 05:43:14 [INFO] encoded CSR
    2021/02/04 05:43:14 [INFO] signed certificate with serial number 88551402085838487825161450787890905954599729836

Create kubelet-k8s-m2-csr.json with the following content.

    $ vim kubelet-k8s-m2-csr.json
    {
      "CN": "system:node:k8s-m2",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "L": "Taipei",
          "ST": "Taipei",
          "O": "system:nodes",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the kubelet certificate for k8s-m2.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -hostname=k8s-m2 \
        -profile=kubernetes \
        kubelet-k8s-m2-csr.json | cfssljson -bare ${PKI_DIR}/kubelet-k8s-m2;

    2021/02/04 05:46:01 [INFO] generate received request
    2021/02/04 05:46:01 [INFO] received CSR
    2021/02/04 05:46:01 [INFO] generating key: rsa-2048
    2021/02/04 05:46:01 [INFO] encoded CSR
    2021/02/04 05:46:01 [INFO] signed certificate with serial number 631793009516906574893487594475892882904568266495

Create kubelet-k8s-m3-csr.json with the following content.

    $ vim kubelet-k8s-m3-csr.json
    {
      "CN": "system:node:k8s-m3",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "TW",
          "L": "Taipei",
          "ST": "Taipei",
          "O": "system:nodes",
          "OU": "Kubernetes-manual"
        }
      ]
    }

Generate the kubelet certificate for k8s-m3.

    $ cfssl gencert \
        -ca=${PKI_DIR}/ca.pem \
        -ca-key=${PKI_DIR}/ca-key.pem \
        -config=ca-config.json \
        -hostname=k8s-m3 \
        -profile=kubernetes \
        kubelet-k8s-m3-csr.json | cfssljson -bare ${PKI_DIR}/kubelet-k8s-m3;

    2021/02/04 05:46:43 [INFO] generate received request
    2021/02/04 05:46:43 [INFO] received CSR
    2021/02/04 05:46:43 [INFO] generating key: rsa-2048
    2021/02/04 05:46:43 [INFO] encoded CSR
    2021/02/04 05:46:43 [INFO] signed certificate with serial number 313125539741502293210284542097458112530534317871
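The three CSR files differ only in the node name inside the CN, so they can be produced from one template. The sketch below assumes nothing beyond the JSON shown above; the function name `write_kubelet_csr` and the output-directory argument are hypothetical conveniences.

```shell
# Sketch: write kubelet-<node>-csr.json for a node from a single template.
# The function name and the output-directory argument are hypothetical.
write_kubelet_csr() {
    node="$1"
    out_dir="${2:-.}"
    cat > "$out_dir/kubelet-$node-csr.json" <<EOF
{
  "CN": "system:node:$node",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "TW",
      "L": "Taipei",
      "ST": "Taipei",
      "O": "system:nodes",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF
}

# Usage:
#   for node in k8s-m1 k8s-m2 k8s-m3; do write_kubelet_csr "$node"; done
```

The same loop could then call `cfssl gencert` with `-hostname=$node`, mirroring the three invocations above.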

Remove the files that are no longer needed. cfssljson -bare writes a .csr file next to each certificate, so those are the files removed here.

    $ rm -rf ${PKI_DIR}/kubelet-k8s-m1.csr
    $ rm -rf ${PKI_DIR}/kubelet-k8s-m2.csr
    $ rm -rf ${PKI_DIR}/kubelet-k8s-m3.csr

List the certificates that were created.

    $ ls ${PKI_DIR}/kubelet*.pem
    /etc/kubernetes/pki/kubelet-k8s-m1-key.pem  /etc/kubernetes/pki/kubelet-k8s-m1.pem
    /etc/kubernetes/pki/kubelet-k8s-m2-key.pem  /etc/kubernetes/pki/kubelet-k8s-m2.pem
    /etc/kubernetes/pki/kubelet-k8s-m3-key.pem  /etc/kubernetes/pki/kubelet-k8s-m3.pem

Rename the certificates on k8s-m1.

    $ cp ${PKI_DIR}/kubelet-k8s-m1-key.pem ${PKI_DIR}/kubelet-key.pem
    $ cp ${PKI_DIR}/kubelet-k8s-m1.pem ${PKI_DIR}/kubelet.pem
    $ rm ${PKI_DIR}/kubelet-k8s-m1-key.pem ${PKI_DIR}/kubelet-k8s-m1.pem

Create the /etc/kubernetes/pki directory on k8s-m2.

    $ mkdir -p /etc/kubernetes/pki

From k8s-m1, copy the certificates to k8s-m2.

    $ scp ${PKI_DIR}/ca.pem k8s-m2:${PKI_DIR}/ca.pem
    $ scp ${PKI_DIR}/kubelet-k8s-m2-key.pem k8s-m2:${PKI_DIR}/kubelet-key.pem
    $ scp ${PKI_DIR}/kubelet-k8s-m2.pem k8s-m2:${PKI_DIR}/kubelet.pem

Create the /etc/kubernetes/pki directory on k8s-m3.

    $ mkdir -p /etc/kubernetes/pki

From k8s-m1, copy the certificates to k8s-m3.

    $ scp ${PKI_DIR}/ca.pem k8s-m3:${PKI_DIR}/ca.pem
    $ scp ${PKI_DIR}/kubelet-k8s-m3-key.pem k8s-m3:${PKI_DIR}/kubelet-key.pem
    $ scp ${PKI_DIR}/kubelet-k8s-m3.pem k8s-m3:${PKI_DIR}/kubelet.pem

On k8s-m1, remove the files that are no longer needed.

    $ rm ${PKI_DIR}/kubelet-k8s-m2-key.pem ${PKI_DIR}/kubelet-k8s-m2.pem
    $ rm ${PKI_DIR}/kubelet-k8s-m3-key.pem ${PKI_DIR}/kubelet-k8s-m3.pem

Next, on k8s-m1, use kubectl to generate the kubelet kubeconfig file.

    $ kubectl config set-cluster kubernetes \
        --certificate-authority=${PKI_DIR}/ca.pem \
        --embed-certs=true \
        --server=${KUBE_APISERVER} \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Cluster "kubernetes" set.

    $ kubectl config set-credentials system:node:k8s-m1 \
        --client-certificate=${PKI_DIR}/kubelet.pem \
        --client-key=${PKI_DIR}/kubelet-key.pem \
        --embed-certs=true \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    User "system:node:k8s-m1" set.

    $ kubectl config set-context system:node:k8s-m1@kubernetes \
        --cluster=kubernetes \
        --user=system:node:k8s-m1 \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Context "system:node:k8s-m1@kubernetes" created.

    $ kubectl config use-context system:node:k8s-m1@kubernetes \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Switched to context "system:node:k8s-m1@kubernetes".

Next, copy buildrc from k8s-m1 to k8s-m2.

    $ scp buildrc k8s-m2:/home/vagrant

On k8s-m2, use kubectl to generate the kubelet kubeconfig file.

    $ source /home/vagrant/buildrc
    $ kubectl config set-cluster kubernetes \
        --certificate-authority=${PKI_DIR}/ca.pem \
        --embed-certs=true \
        --server=${KUBE_APISERVER} \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Cluster "kubernetes" set.

    $ kubectl config set-credentials system:node:k8s-m2 \
        --client-certificate=${PKI_DIR}/kubelet.pem \
        --client-key=${PKI_DIR}/kubelet-key.pem \
        --embed-certs=true \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    User "system:node:k8s-m2" set.

    $ kubectl config set-context system:node:k8s-m2@kubernetes \
        --cluster=kubernetes \
        --user=system:node:k8s-m2 \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Context "system:node:k8s-m2@kubernetes" created.

    $ kubectl config use-context system:node:k8s-m2@kubernetes \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Switched to context "system:node:k8s-m2@kubernetes".

Next, copy buildrc from k8s-m1 to k8s-m3.

    $ scp buildrc k8s-m3:/home/vagrant

On k8s-m3, use kubectl to generate the kubelet kubeconfig file.

    $ source /home/vagrant/buildrc
    $ kubectl config set-cluster kubernetes \
        --certificate-authority=${PKI_DIR}/ca.pem \
        --embed-certs=true \
        --server=${KUBE_APISERVER} \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Cluster "kubernetes" set.

    $ kubectl config set-credentials system:node:k8s-m3 \
        --client-certificate=${PKI_DIR}/kubelet.pem \
        --client-key=${PKI_DIR}/kubelet-key.pem \
        --embed-certs=true \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    User "system:node:k8s-m3" set.

    $ kubectl config set-context system:node:k8s-m3@kubernetes \
        --cluster=kubernetes \
        --user=system:node:k8s-m3 \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Context "system:node:k8s-m3@kubernetes" created.

    $ kubectl config use-context system:node:k8s-m3@kubernetes \
        --kubeconfig=${K8S_DIR}/kubelet.conf

    Switched to context "system:node:k8s-m3@kubernetes".
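Every kubeconfig in this section is built with the same four kubectl calls, differing only in the user name, certificate base name, and output file. A wrapper function captures that pattern. This is a sketch rather than part of the original procedure: `make_kubeconfig` and the `KUBECTL` variable are hypothetical, and by default the commands are only printed so the sketch can be reviewed without a cluster.

```shell
# Sketch: wrap the four kubectl calls used for each kubeconfig in one function.
# KUBECTL defaults to "echo kubectl", so the commands are printed, not executed;
# set KUBECTL=kubectl to run them. Function and variable names are hypothetical.
KUBECTL="${KUBECTL:-echo kubectl}"
PKI_DIR="${PKI_DIR:-/etc/kubernetes/pki}"
K8S_DIR="${K8S_DIR:-/etc/kubernetes}"
KUBE_APISERVER="${KUBE_APISERVER:-https://192.168.20.20:6443}"

make_kubeconfig() {
    user="$1"          # e.g. system:node:k8s-m2
    cert_base="$2"     # e.g. kubelet -> kubelet.pem and kubelet-key.pem
    conf="$K8S_DIR/$3" # e.g. kubelet.conf
    $KUBECTL config set-cluster kubernetes \
        --certificate-authority="$PKI_DIR/ca.pem" \
        --embed-certs=true \
        --server="$KUBE_APISERVER" \
        --kubeconfig="$conf"
    $KUBECTL config set-credentials "$user" \
        --client-certificate="$PKI_DIR/${cert_base}.pem" \
        --client-key="$PKI_DIR/${cert_base}-key.pem" \
        --embed-certs=true \
        --kubeconfig="$conf"
    $KUBECTL config set-context "$user@kubernetes" \
        --cluster=kubernetes \
        --user="$user" \
        --kubeconfig="$conf"
    $KUBECTL config use-context "$user@kubernetes" \
        --kubeconfig="$conf"
}

make_kubeconfig system:node:k8s-m2 kubelet kubelet.conf
```

With `KUBECTL=kubectl` exported, a call such as `make_kubeconfig system:kube-scheduler scheduler scheduler.conf` would correspond to the Scheduler steps shown earlier.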

Service Account Key

The Kubernetes Controller Manager uses a key pair to generate and sign Service Account tokens. Create a public/private key pair for the API Server and the Controller Manager to use.

Create the key pair on k8s-m1.

    $ openssl genrsa -out ${PKI_DIR}/sa.key 2048

    Generating RSA private key, 2048 bit long modulus (2 primes)
    ........................................................+++++
    ..................+++++
    e is 65537 (0x010001)

    $ openssl rsa -in ${PKI_DIR}/sa.key -pubout -out ${PKI_DIR}/sa.pub

    writing RSA key

    $ ls ${PKI_DIR}/sa.*
    /etc/kubernetes/pki/sa.key  /etc/kubernetes/pki/sa.pub

Copy the keys from k8s-m1 to k8s-m2 and k8s-m3.

    $ scp ${PKI_DIR}/sa.key ${PKI_DIR}/sa.pub k8s-m2:${PKI_DIR}/
    $ scp ${PKI_DIR}/sa.key ${PKI_DIR}/sa.pub k8s-m3:${PKI_DIR}/

Copy the kubeconfig files from k8s-m1 to k8s-m2 and k8s-m3.

    $ scp ${K8S_DIR}/admin.conf ${K8S_DIR}/controller-manager.conf ${K8S_DIR}/scheduler.conf k8s-m2:${K8S_DIR}/
    $ scp ${K8S_DIR}/admin.conf ${K8S_DIR}/controller-manager.conf ${K8S_DIR}/scheduler.conf k8s-m3:${K8S_DIR}/

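HAProxy

The Kubernetes cluster has three masters, k8s-m1, k8s-m2, and k8s-m3, each running an API Server. We want requests sent to the VIP to be distributed evenly across the API Servers, and HAProxy provides that load balancing.

Note: HAProxy must be installed and started on k8s-m1, k8s-m2, and k8s-m3.

Update the package index (for example, with `apt update`).

Install HAProxy.

    $ apt install -y haproxy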

After the installation finishes, you can check which HAProxy version is installed.

    $ apt info haproxy -a
    Package: haproxy
    Version: 2.0.13-2ubuntu0.1
    Priority: optional
    Section: net
    Origin: Ubuntu
    Maintainer: Ubuntu Developers <ubuntu-devel-discuss@lists.ubuntu.com>
    Original-Maintainer: Debian HAProxy Maintainers <haproxy@tracker.debian.org>
    Bugs: https://bugs.launchpad.net/ubuntu/+filebug
    Installed-Size: 3,287 kB
    Pre-Depends: dpkg (>= 1.17.14)
    Depends: libc6 (>= 2.17), libcrypt1 (>= 1:4.1.0), libgcc-s1 (>= 3.0), liblua5.3-0, libpcre2-8-0 (>= 10.22), libssl1.1 (>= 1.1.1), libsystemd0, zlib1g (>= 1:1.1.4), adduser, lsb-base (>= 3.0-6)
    Suggests: vim-haproxy, haproxy-doc
    Homepage: http://www.haproxy.org/
    Download-Size: 1,519 kB
    APT-Manual-Installed: yes
    APT-Sources: http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages
    Description: fast and reliable load balancing reverse proxy
     HAProxy is a TCP/HTTP reverse proxy which is particularly suited for high
     availability environments. It features connection persistence through HTTP
     cookies, load balancing, header addition, modification, deletion both ways. It
     has request blocking capabilities and provides interface to display server
     status.

    Package: haproxy
    Version: 2.0.13-2
    Priority: optional
    Section: net
    Origin: Ubuntu
    Maintainer: Ubuntu Developers <ubuntu-devel-discuss@lists.ubuntu.com>
    Original-Maintainer: Debian HAProxy Maintainers <haproxy@tracker.debian.org>
    Bugs: https://bugs.launchpad.net/ubuntu/+filebug
    Installed-Size: 3,287 kB
    Pre-Depends: dpkg (>= 1.17.14)
    Depends: libc6 (>= 2.17), libcrypt1 (>= 1:4.1.0), libgcc-s1 (>= 3.0), liblua5.3-0, libpcre2-8-0 (>= 10.22), libssl1.1 (>= 1.1.1), libsystemd0, zlib1g (>= 1:1.1.4), adduser, lsb-base (>= 3.0-6)
    Suggests: vim-haproxy, haproxy-doc
    Homepage: http://www.haproxy.org/
    Download-Size: 1,519 kB
    APT-Sources: http://archive.ubuntu.com/ubuntu focal/main amd64 Packages
    Description: fast and reliable load balancing reverse proxy
     HAProxy is a TCP/HTTP reverse proxy which is particularly suited for high
     availability environments. It features connection persistence through HTTP
     cookies, load balancing, header addition, modification, deletion both ways. It
     has request blocking capabilities and provides interface to display server
     status.

Configure the /etc/haproxy/haproxy.cfg file.

    $ vim /etc/haproxy/haproxy.cfg
    ...
    frontend kube-apiserver-https
        mode tcp
        bind :6443
        default_backend kube-apiserver-backend

    backend kube-apiserver-backend
        mode tcp
        server k8s-m1-api 192.168.20.10:5443 check
        server k8s-m2-api 192.168.20.11:5443 check
        server k8s-m3-api 192.168.20.12:5443 check

    frontend etcd-frontend
        mode tcp
        bind :2381
        default_backend etcd-backend

    backend etcd-backend
        mode tcp
        server k8s-m1-api 192.168.20.10:2379 check
        server k8s-m2-api 192.168.20.11:2379 check
        server k8s-m3-api 192.168.20.12:2379 check

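Append the configuration above to the end of /etc/haproxy/haproxy.cfg. The API server frontend listens on port 6443 and forwards to port 5443 on each master; the etcd frontend listens on port 2381 and forwards to port 2379 on each master.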
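Both proxy sections follow the same pattern: a TCP frontend bound to one port and a backend listing the three masters on another. As a rough sketch of that pattern, the helper below prints such a frontend/backend pair for a list of IPs. render_tcp_proxy and the section and server names it generates are hypothetical and differ from the names used in the configuration above.

```shell
#!/bin/sh
# Sketch: print a TCP frontend/backend pair in haproxy.cfg syntax.
# render_tcp_proxy NAME LISTEN_PORT BACKEND_PORT IP...
# NAME is a hypothetical label used to build the section and server names.
render_tcp_proxy() {
    name=$1
    listen_port=$2
    backend_port=$3
    shift 3
    printf 'frontend %s-frontend\n' "$name"
    printf '    mode tcp\n'
    printf '    bind :%s\n' "$listen_port"
    printf '    default_backend %s-backend\n\n' "$name"
    printf 'backend %s-backend\n' "$name"
    printf '    mode tcp\n'
    i=1
    for ip in "$@"; do
        # "check" enables HAProxy health checks for this server
        printf '    server %s-%d %s:%s check\n' "$name" "$i" "$ip" "$backend_port"
        i=$((i + 1))
    done
}
```

For example, `render_tcp_proxy etcd 2381 2379 192.168.20.10 192.168.20.11 192.168.20.12` prints a section equivalent to the etcd part above. After editing the real file, running `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration without starting the service.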

Start HAProxy.

    $ systemctl enable haproxy
    $ systemctl start haproxy

Check the HAProxy status.

    $ systemctl status haproxy
    ● haproxy.service - HAProxy Load Balancer
         Loaded: loaded (/lib/systemd/system/haproxy.service; enabled; vendor preset: enabled)
         Active: active (running) since Thu 2021-02-04 02:43:44 UTC; 11min ago
           Docs: man:haproxy(1)
                 file:/usr/share/doc/haproxy/configuration.txt.gz
       Main PID: 38407 (haproxy)
          Tasks: 2 (limit: 2281)
         Memory: 1.8M
         CGroup: /system.slice/haproxy.service
                 ├─38407 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock
                 └─38408 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

    Feb 04 02:43:44 k8s-m1 systemd[1]: Starting HAProxy Load Balancer...
    Feb 04 02:43:44 k8s-m1 haproxy[38407]: [NOTICE] 034/024344 (38407) : New worker #1 (38408) forked
    Feb 04 02:43:44 k8s-m1 systemd[1]: Started HAProxy Load Balancer.

Keepalived

Keepalived provides a VIP on a network interface so that all services can be reached through the VIP.

Note: Keepalived must be installed and started on k8s-m1, k8s-m2, and k8s-m3.

Install Keepalived.

    $ apt install -y keepalived

After installation, check the installed Keepalived version.

    $ apt info keepalived -a
    Package: keepalived
    Version: 1:2.0.19-2
    Priority: optional
    Section: admin
    Origin: Ubuntu
    Maintainer: Ubuntu Developers <ubuntu-devel-discuss@lists.ubuntu.com>
    Original-Maintainer: Alexander Wirt <formorer@debian.org>
    Bugs: https://bugs.launchpad.net/ubuntu/+filebug
    Installed-Size: 1,249 kB
    Pre-Depends: init-system-helpers (>= 1.54~)
    Depends: iproute2, libc6 (>= 2.28), libglib2.0-0 (>= 2.26.0), libmnl0 (>= 1.0.3-4~), libnftnl11 (>= 1.1.2), libnl-3-200 (>= 3.2.27), libnl-genl-3-200 (>= 3.2.7), libpcre2-8-0 (>= 10.22), libsnmp35 (>= 5.8+dfsg), libssl1.1 (>= 1.1.0)
    Recommends: ipvsadm
    Homepage: http://keepalived.org
    Download-Size: 360 kB
    APT-Manual-Installed: yes
    APT-Sources: http://archive.ubuntu.com/ubuntu focal/main amd64 Packages
    Description: Failover and monitoring daemon for LVS clusters
     keepalived is used for monitoring real servers within a Linux Virtual Server
     (LVS) cluster. keepalived can be configured to remove real servers from the
     cluster pool if it stops responding, as well as send a notification email to
     make the admin aware of the service failure.
     .
     In addition, keepalived implements an independent Virtual Router Redundancy
     Protocol (VRRPv2; see rfc2338 for additional info) framework for director
     failover.
     .
     You need a kernel >= 2.4.28 or >= 2.6.11 for keepalived.
     See README.Debian for more information.

On k8s-m1, configure the /etc/keepalived/keepalived.conf file.

    $ vim /etc/keepalived/keepalived.conf
    vrrp_instance V1 {
        state MASTER
        interface eth1
        virtual_router_id 61
        priority 102
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.20.20
        }
    }

On k8s-m2, configure the /etc/keepalived/keepalived.conf file.

    $ vim /etc/keepalived/keepalived.conf
    vrrp_instance V1 {
        state BACKUP
        interface eth1
        virtual_router_id 61
        priority 101
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.20.20
        }
    }

On k8s-m3, configure the /etc/keepalived/keepalived.conf file.

    $ vim /etc/keepalived/keepalived.conf
    vrrp_instance V1 {
        state BACKUP
        interface eth1
        virtual_router_id 61
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.20.20
        }
    }

Before applying these configurations, change the interface value to match the network interface on your own nodes.
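The three keepalived.conf files differ only in state and priority (MASTER/102 on k8s-m1, BACKUP/101 on k8s-m2, BACKUP/100 on k8s-m3), plus whatever interface name applies to your nodes. A small template function makes that explicit. This is a sketch only; render_keepalived is a hypothetical helper, and the fixed values inside it are copied from the configurations above.

```shell
#!/bin/sh
# Sketch: print a keepalived.conf for one node.
# render_keepalived STATE PRIORITY INTERFACE VIP
# (hypothetical helper; fixed values mirror the configs in this post)
render_keepalived() {
    cat <<EOF
vrrp_instance V1 {
    state $1
    interface $3
    virtual_router_id 61
    priority $2
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        $4
    }
}
EOF
}
```

For example, `render_keepalived BACKUP 101 eth1 192.168.20.20` prints the k8s-m2 configuration; redirecting the output to /etc/keepalived/keepalived.conf would write it in place.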

Start Keepalived.

    $ systemctl enable keepalived
    $ systemctl start keepalived

Check the Keepalived status.

    $ systemctl status keepalived
    ● keepalived.service - Keepalive Daemon (LVS and VRRP)
         Loaded: loaded (/lib/systemd/system/keepalived.service; enabled; vendor preset: enabled)
         Active: active (running) since Thu 2021-02-04 03:12:04 UTC; 17s ago
       Main PID: 39501 (keepalived)
          Tasks: 2 (limit: 2281)
         Memory: 2.4M
         CGroup: /system.slice/keepalived.service
                 ├─39501 /usr/sbin/keepalived --dont-fork
                 └─39512 /usr/sbin/keepalived --dont-fork

    Feb 04 03:12:04 k8s-m1 Keepalived[39501]: WARNING - keepalived was build for newer Linux 5.4.18, running on Linux 5.4.0-58-generic #64-Ubuntu SMP Wed Dec 9 08:16:25 UTC 2020
    Feb 04 03:12:04 k8s-m1 Keepalived[39501]: Command line: '/usr/sbin/keepalived' '--dont-fork'
    Feb 04 03:12:04 k8s-m1 Keepalived[39501]: Opening file '/etc/keepalived/keepalived.conf'.
    Feb 04 03:12:05 k8s-m1 Keepalived[39501]: Starting VRRP child process, pid=39512
    Feb 04 03:12:05 k8s-m1 Keepalived_vrrp[39512]: Registering Kernel netlink reflector
    Feb 04 03:12:05 k8s-m1 Keepalived_vrrp[39512]: Registering Kernel netlink command channel
    Feb 04 03:12:05 k8s-m1 Keepalived_vrrp[39512]: Opening file '/etc/keepalived/keepalived.conf'.
    Feb 04 03:12:05 k8s-m1 Keepalived_vrrp[39512]: Registering gratuitous ARP shared channel
    Feb 04 03:12:05 k8s-m1 Keepalived_vrrp[39512]: (V1) Entering BACKUP STATE (init)
    Feb 04 03:12:08 k8s-m1 Keepalived_vrrp[39512]: (V1) Entering MASTER STATE

Confirm that the VIP is bound to the network interface.

    $ ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 08:00:27:14:86:db brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic eth0
           valid_lft 60637sec preferred_lft 60637sec
        inet6 fe80::a00:27ff:fe14:86db/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 08:00:27:4d:c8:d9 brd ff:ff:ff:ff:ff:ff
        inet 192.168.20.10/24 brd 192.168.20.255 scope global eth1
           valid_lft forever preferred_lft forever
        inet 192.168.20.20/32 scope global eth1
           valid_lft forever preferred_lft forever
        inet6 fe80::a00:27ff:fe4d:c8d9/64 scope link
           valid_lft forever preferred_lft forever

The output above shows that the VIP is bound to the eth1 interface on k8s-m1.
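Reading the full ip addr output by eye gets tedious when checking several nodes or testing failover. As a sketch, the helper below reads the one-line-per-address output of `ip -o -4 addr show` on stdin and prints the interface that carries a given address. addr_iface is a hypothetical name introduced here.

```shell
#!/bin/sh
# Sketch: find which interface carries a given IPv4 address.
# addr_iface IP
# Reads `ip -o -4 addr show` output on stdin; prints the interface name,
# or nothing if no interface on this node holds the address.
addr_iface() {
    awk -v ip="$1" '
        $3 == "inet" {
            split($4, a, "/")          # strip the /prefix from "addr/prefix"
            if (a[1] == ip) print $2   # $2 is the interface name
        }
    '
}
```

Running `ip -o -4 addr show | addr_iface 192.168.20.20` on each master should print an interface name only on the node that currently holds the VIP.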

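Etcd Cluster

Before building the Kubernetes cluster, we need to form an etcd cluster on k8s-m1, k8s-m2, and k8s-m3. It stores the state of Kubernetes and information about its components. Controllers later compare the state the user expects for services running on Kubernetes with what is stored in etcd, and create, delete, or modify resources accordingly.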

Note: etcd must be installed and started on k8s-m1, k8s-m2, and k8s-m3.

Download the etcd archive.

    $ wget https://github.com/etcd-io/etcd/releases/download/v3.3.8/etcd-v3.3.8-linux-amd64.tar.gz

v3.3.8 is used here. Newer versions differ slightly in the configuration that follows, so choose a version accordingly.

Extract the etcd-v3.3.8-linux-amd64.tar.gz file.

    $ tar -xvf etcd-v3.3.8-linux-amd64.tar.gz

Copy the etcd and etcdctl binaries from the extracted etcd-v3.3.8-linux-amd64 directory to /usr/local/bin/.

    $ cp etcd-v3.3.8-linux-amd64/etcd etcd-v3.3.8-linux-amd64/etcdctl /usr/local/bin/

Create the /etc/systemd/system/etcd.service unit file.

    $ vim /etc/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    Documentation=https://github.com/coreos/etcd
    After=network.target

    [Service]
    User=root
    Type=notify
    ExecStart=etcd --config-file /etc/etcd/config.yml
    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=40000

    [Install]
    WantedBy=multi-user.target

On k8s-m1, create the /etc/etcd/config.yml configuration file.

    $ vim /etc/etcd/config.yml
    name: k8s-m1
    data-dir: /var/lib/etcd
    wal-dir: /var/lib/etcd/wal
    snapshot-count: 10000
    heartbeat-interval: 100
    election-timeout: 1000
    quota-backend-bytes: 0
    listen-peer-urls: 'https://0.0.0.0:2380'
    listen-client-urls: 'https://0.0.0.0:2379'
    max-snapshots: 5
    max-wals: 5
    cors:
    initial-advertise-peer-urls: 'https://192.168.20.10:2380'
    advertise-client-urls: 'https://192.168.20.10:2379'
    discovery:
    discovery-fallback: 'proxy'
    discovery-proxy:
    discovery-srv:
    initial-cluster: "k8s-m1=https://192.168.20.10:2380,k8s-m2=https://192.168.20.11:2380,k8s-m3=https://192.168.20.12:2380"
    initial-cluster-token: 'k8s-etcd-cluster'
    initial-cluster-state: 'new'
    strict-reconfig-check: false
    enable-v2: true
    enable-pprof: true
    proxy: 'off'
    proxy-failure-wait: 5000
    proxy-refresh-interval: 30000
    proxy-dial-timeout: 1000
    proxy-write-timeout: 5000
    proxy-read-timeout: 0
    client-transport-security:
      ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      cert-file: '/etc/etcd/ssl/etcd.pem'
      key-file: '/etc/etcd/ssl/etcd-key.pem'
      client-cert-auth: true
      trusted-ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      auto-tls: true
    peer-transport-security:
      ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      cert-file: '/etc/etcd/ssl/etcd.pem'
      key-file: '/etc/etcd/ssl/etcd-key.pem'
      peer-client-cert-auth: true
      trusted-ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      auto-tls: true
    debug: false
    log-package-levels:
    log-output: default
    force-new-cluster: false

On k8s-m2, create the /etc/etcd/config.yml configuration file.

    $ vim /etc/etcd/config.yml
    name: k8s-m2
    data-dir: /var/lib/etcd
    wal-dir: /var/lib/etcd/wal
    snapshot-count: 10000
    heartbeat-interval: 100
    election-timeout: 1000
    quota-backend-bytes: 0
    listen-peer-urls: 'https://0.0.0.0:2380'
    listen-client-urls: 'https://0.0.0.0:2379'
    max-snapshots: 5
    max-wals: 5
    cors:
    initial-advertise-peer-urls: 'https://192.168.20.11:2380'
    advertise-client-urls: 'https://192.168.20.11:2379'
    discovery:
    discovery-fallback: 'proxy'
    discovery-proxy:
    discovery-srv:
    initial-cluster: "k8s-m1=https://192.168.20.10:2380,k8s-m2=https://192.168.20.11:2380,k8s-m3=https://192.168.20.12:2380"
    initial-cluster-token: 'k8s-etcd-cluster'
    initial-cluster-state: 'new'
    strict-reconfig-check: false
    enable-v2: true
    enable-pprof: true
    proxy: 'off'
    proxy-failure-wait: 5000
    proxy-refresh-interval: 30000
    proxy-dial-timeout: 1000
    proxy-write-timeout: 5000
    proxy-read-timeout: 0
    client-transport-security:
      ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      cert-file: '/etc/etcd/ssl/etcd.pem'
      key-file: '/etc/etcd/ssl/etcd-key.pem'
      client-cert-auth: true
      trusted-ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      auto-tls: true
    peer-transport-security:
      ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      cert-file: '/etc/etcd/ssl/etcd.pem'
      key-file: '/etc/etcd/ssl/etcd-key.pem'
      peer-client-cert-auth: true
      trusted-ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      auto-tls: true
    debug: false
    log-package-levels:
    log-output: default
    force-new-cluster: false

On k8s-m3, create the /etc/etcd/config.yml configuration file.

    $ vim /etc/etcd/config.yml
    name: k8s-m3
    data-dir: /var/lib/etcd
    wal-dir: /var/lib/etcd/wal
    snapshot-count: 10000
    heartbeat-interval: 100
    election-timeout: 1000
    quota-backend-bytes: 0
    listen-peer-urls: 'https://0.0.0.0:2380'
    listen-client-urls: 'https://0.0.0.0:2379'
    max-snapshots: 5
    max-wals: 5
    cors:
    initial-advertise-peer-urls: 'https://192.168.20.12:2380'
    advertise-client-urls: 'https://192.168.20.12:2379'
    discovery:
    discovery-fallback: 'proxy'
    discovery-proxy:
    discovery-srv:
    initial-cluster: "k8s-m1=https://192.168.20.10:2380,k8s-m2=https://192.168.20.11:2380,k8s-m3=https://192.168.20.12:2380"
    initial-cluster-token: 'k8s-etcd-cluster'
    initial-cluster-state: 'new'
    strict-reconfig-check: false
    enable-v2: true
    enable-pprof: true
    proxy: 'off'
    proxy-failure-wait: 5000
    proxy-refresh-interval: 30000
    proxy-dial-timeout: 1000
    proxy-write-timeout: 5000
    proxy-read-timeout: 0
    client-transport-security:
      ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      cert-file: '/etc/etcd/ssl/etcd.pem'
      key-file: '/etc/etcd/ssl/etcd-key.pem'
      client-cert-auth: true
      trusted-ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      auto-tls: true
    peer-transport-security:
      ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      cert-file: '/etc/etcd/ssl/etcd.pem'
      key-file: '/etc/etcd/ssl/etcd-key.pem'
      peer-client-cert-auth: true
      trusted-ca-file: '/etc/etcd/ssl/etcd-ca.pem'
      auto-tls: true
    debug: false
    log-package-levels:
    log-output: default
    force-new-cluster: false
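The three config.yml files are identical except for name, initial-advertise-peer-urls, and advertise-client-urls, while initial-cluster is the same on every node and lists all three peers. The sketch below derives those values from NAME=IP pairs, which can help avoid copy-and-paste slips. initial_cluster and node_overrides are hypothetical helper names; ports 2380 and 2379 are taken from the configuration above.

```shell
#!/bin/sh
# Sketch: derive the per-node and shared etcd settings from NAME=IP pairs.

# initial_cluster NAME=IP...
# Prints the value for initial-cluster (identical on every node).
initial_cluster() {
    result=""
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        result="${result:+$result,}${name}=https://${ip}:2380"
    done
    printf '%s\n' "$result"
}

# node_overrides NAME IP
# Prints the three settings that differ between nodes.
node_overrides() {
    printf 'name: %s\n' "$1"
    printf "initial-advertise-peer-urls: 'https://%s:2380'\n" "$2"
    printf "advertise-client-urls: 'https://%s:2379'\n" "$2"
}
```

For example, `initial_cluster k8s-m1=192.168.20.10 k8s-m2=192.168.20.11 k8s-m3=192.168.20.12` prints the initial-cluster string used above, and `node_overrides k8s-m2 192.168.20.11` prints the lines specific to k8s-m2.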

Start etcd.

    $ systemctl enable etcd
    $ systemctl start etcd

Check the etcd status.

    $ systemctl status etcd
    ● etcd.service - Etcd Server
         Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: enabled)
         Active: active (running) since Thu 2021-02-04 03:41:37 UTC; 46s ago
           Docs: https://github.com/coreos/etcd
       Main PID: 39586 (etcd)
          Tasks: 7 (limit: 2281)
         Memory: 9.4M
         CGroup: /system.slice/etcd.service
                 └─39586 /usr/local/bin/etcd --config-file /etc/etcd/config.yml

    Feb 04 03:41:37 k8s-m1 etcd[39586]: enabled capabilities for version 3.0
    Feb 04 03:41:38 k8s-m1 etcd[39586]: health check for peer 77fc17e6efe1ad72 could not connect: dial tcp 192.168.20.12:2380: getsockopt: connection refused
    Feb 04 03:41:39 k8s-m1 etcd[39586]: peer 77fc17e6efe1ad72 became active
    Feb 04 03:41:39 k8s-m1 etcd[39586]: established a TCP streaming connection with peer 77fc17e6efe1ad72 (stream Message reader)
    Feb 04 03:41:39 k8s-m1 etcd[39586]: established a TCP streaming connection with peer 77fc17e6efe1ad72 (stream Message writer)
    Feb 04 03:41:39 k8s-m1 etcd[39586]: established a TCP streaming connection with peer 77fc17e6efe1ad72 (stream MsgApp v2 writer)
    Feb 04 03:41:39 k8s-m1 etcd[39586]: established a TCP streaming connection with peer 77fc17e6efe1ad72 (stream MsgApp v2 reader)
    Feb 04 03:41:41 k8s-m1 etcd[39586]: updating the cluster version from 3.0 to 3.3
    Feb 04 03:41:41 k8s-m1 etcd[39586]: updated the cluster version from 3.0 to 3.3
    Feb 04 03:41:41 k8s-m1 etcd[39586]: enabled capabilities for version 3.3

Check the health of each member of the etcd cluster.

    $ etcdctl --endpoints https://127.0.0.1:2379 --ca-file=/etc/etcd/ssl/etcd-ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem --debug cluster-health
    Cluster-Endpoints: https://127.0.0.1:2379
    cURL Command: curl -X GET https://127.0.0.1:2379/v2/members
    member 77fc17e6efe1ad72 is healthy: got healthy result from https://192.168.20.12:2379
    member 962eacc32c073e45 is healthy: got healthy result from https://192.168.20.11:2379
    member be410bb048009f30 is healthy: got healthy result from https://192.168.20.10:2379
    cluster is healthy
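When scripting the installation, it can be handy to turn this output into a number rather than reading it by eye. The following sketch counts the members reported as healthy in cluster-health output supplied on stdin. healthy_members is a hypothetical helper, and it assumes the line format shown above.

```shell
#!/bin/sh
# Sketch: count healthy members in `etcdctl cluster-health` output.
# healthy_members
# Reads the command output on stdin and prints the number of lines of the
# form "member <id> is healthy: ...". Lines such as "cluster is healthy"
# or "member <id> is unhealthy" are not counted.
healthy_members() {
    # grep -c exits non-zero when the count is 0; "|| true" hides that
    grep -c '^member .* is healthy' || true
}
```

Piping the etcdctl command above into `healthy_members` should print 3 for this three-node cluster.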

APIServer

The API server handles communication between components, for example when the Scheduler performs filtering and scoring for Pods, or when a user operates Kubernetes with the kubectl command.

Note: the API server must be installed and started on k8s-m1, k8s-m2, and k8s-m3.

On k8s-m1, create the /etc/systemd/system/apiserver.service file.

    $ vim /etc/systemd/system/apiserver.service
    [Unit]
    Description=k8s API Server
    Documentation=https://github.com/coreos/etcd
    After=network.target

    [Service]
    User=root
    Type=notify
    ExecStart=kube-apiserver \
      --v=0 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=5443 \
      --insecure-port=0 \
      --advertise-address=192.168.20.20 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.20.20:2381 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=front-proxy-client \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --disable-admission-plugins=PersistentVolumeLabel \
      --enable-admission-plugins=NodeRestriction \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=128 \
      --audit-log-path=/var/log/kubernetes/audit.log \
      --audit-policy-file=/etc/kubernetes/audit/policy.yml \
      --encryption-provider-config=/etc/kubernetes/encryption/config.yml \
      --event-ttl=1h
    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=40000

    [Install]
    WantedBy=multi-user.target

advertise-address must be set to the VIP.

etcd-servers must use port 2381 so that calls to etcd go through the load balancer.

On k8s-m1, create the /etc/kubernetes/encryption/config.yml and /etc/kubernetes/audit/policy.yml files.

    $ mkdir -p /etc/kubernetes/encryption/
    $ vim /etc/kubernetes/encryption/config.yml
    kind: EncryptionConfig
    apiVersion: v1
    resources:
      - resources:
          - secrets
        providers:
          - aescbc:
              keys:
                - name: key1
                  secret: 8a22dac954598020c9eeab1c368f7c82
          - identity: {}

    $ mkdir -p /etc/kubernetes/audit
    $ vim /etc/kubernetes/audit/policy.yml
    apiVersion: audit.k8s.io/v1beta1
    kind: Policy
    rules:
    - level: Metadata

Change the secret value to suit your own needs.
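One way to produce a replacement secret is to read random bytes and base64-encode them, which is the approach the Kubernetes documentation on encrypting data at rest suggests for aescbc keys. The sketch below wraps that in a function. new_encryption_key is a hypothetical name, and the 32-byte key length is the documentation's suggestion rather than what the example value above appears to use.

```shell
#!/bin/sh
# Sketch: generate a random key for the aescbc provider.
# new_encryption_key
# Prints 32 random bytes, base64-encoded (44 characters).
new_encryption_key() {
    head -c 32 /dev/urandom | base64
}
```

Whatever value is generated, the same secret has to be used in the encryption config on all three masters, otherwise one API server would not be able to read Secrets written by another.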

On k8s-m1, start the Kubernetes API server.

    $ systemctl enable apiserver
    $ systemctl start apiserver

On k8s-m1, check the Kubernetes API server status.

    $ systemctl status apiserver
    ● apiserver.service - k8s API Server
         Loaded: loaded (/etc/systemd/system/apiserver.service; enabled; vendor preset: enabled)
         Active: active (running) since Sat 2021-02-06 01:54:06 UTC; 6s ago
           Docs: https://github.com/coreos/etcd
       Main PID: 58191 (kube-apiserver)
          Tasks: 6 (limit: 2281)
         Memory: 296.6M
         CGroup: /system.slice/apiserver.service
                 └─58191 /usr/local/bin/kube-apiserver --v=0 --logtostderr=true --allow-privileged=true --bind-address=0.0.0.0 --secure-port=5443 --insecure-port=0 --advertise-address=192.168.20.20 --service-cluster-ip-range=10.96.0.0/12 --service-node-port>

    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: I0206 01:54:06.838406 58191 crd_finalizer.go:266] Starting CRDFinalizer
    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: E0206 01:54:06.897990 58191 controller.go:152] Unable to remove old endpoints from kubernetes service: StorageError: key not found, Code: 1, Key: /registry/masterleases/192.168.20.20, ResourceVersion: 0, A>
    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: I0206 01:54:06.930700 58191 cache.go:39] Caches are synced for autoregister controller
    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: I0206 01:54:06.931472 58191 shared_informer.go:247] Caches are synced for cluster_authentication_trust_controller
    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: I0206 01:54:06.934754 58191 cache.go:39] Caches are synced for APIServiceRegistrationController controller
    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: I0206 01:54:06.939621 58191 cache.go:39] Caches are synced for AvailableConditionController controller
    Feb 06 01:54:06 k8s-m1 kube-apiserver[58191]: I0206 01:54:06.940080 58191 shared_informer.go:247] Caches are synced for crd-autoregister
    Feb 06 01:54:07 k8s-m1 kube-apiserver[58191]: I0206 01:54:07.827368 58191 controller.go:132] OpenAPI AggregationController: action for item : Nothing (removed from the queue).
    Feb 06 01:54:07 k8s-m1 kube-apiserver[58191]: I0206 01:54:07.828477 58191 controller.go:132] OpenAPI AggregationController: action for item k8s_internal_local_delegation_chain_0000000000: Nothing (removed from the queue).
    Feb 06 01:54:07 k8s-m1 kube-apiserver[58191]: I0206 01:54:07.843918 58191 storage_scheduling.go:143] all system priority classes are created successfully or already exist.

Copy the API server and front-proxy certificates and keys under /etc/kubernetes/pki/ from k8s-m1 to k8s-m2 and k8s-m3.

    $ scp ${PKI_DIR}/apiserver.pem ${PKI_DIR}/apiserver-key.pem ${PKI_DIR}/front-proxy-ca.pem ${PKI_DIR}/front-proxy-client.pem ${PKI_DIR}/front-proxy-client-key.pem k8s-m2:${PKI_DIR}/
    $ scp ${PKI_DIR}/apiserver.pem ${PKI_DIR}/apiserver-key.pem ${PKI_DIR}/front-proxy-ca.pem ${PKI_DIR}/front-proxy-client.pem ${PKI_DIR}/front-proxy-client-key.pem k8s-m3:${PKI_DIR}/

On k8s-m2, create the /etc/systemd/system/apiserver.service file.

    $ vim /etc/systemd/system/apiserver.service
    [Unit]
    Description=k8s API Server
    Documentation=https://github.com/coreos/etcd
    After=network.target

    [Service]
    User=root
    Type=notify
    ExecStart=kube-apiserver \
      --v=0 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=5443 \
      --insecure-port=0 \
      --advertise-address=192.168.20.20 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.20.20:2381 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=front-proxy-client \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --disable-admission-plugins=PersistentVolumeLabel \
      --enable-admission-plugins=NodeRestriction \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=128 \
      --audit-log-path=/var/log/kubernetes/audit.log \
      --audit-policy-file=/etc/kubernetes/audit/policy.yml \
      --encryption-provider-config=/etc/kubernetes/encryption/config.yml \
      --event-ttl=1h
    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=40000

    [Install]
    WantedBy=multi-user.target

On k8s-m2, create the /etc/kubernetes/encryption/config.yml and /etc/kubernetes/audit/policy.yml files.

    $ mkdir -p /etc/kubernetes/encryption/
    $ vim /etc/kubernetes/encryption/config.yml
    kind: EncryptionConfig
    apiVersion: v1
    resources:
      - resources:
          - secrets
        providers:
          - aescbc:
              keys:
                - name: key1
                  secret: 8a22dac954598020c9eeab1c368f7c82
          - identity: {}

    $ mkdir -p /etc/kubernetes/audit
    $ vim /etc/kubernetes/audit/policy.yml
    apiVersion: audit.k8s.io/v1beta1
    kind: Policy
    rules:
    - level: Metadata

On k8s-m2, start the Kubernetes API server.

    $ systemctl enable apiserver
    $ systemctl start apiserver

On k8s-m2, check the Kubernetes API server status.

    $ systemctl status apiserver
    ● apiserver.service - k8s API Server
         Loaded: loaded (/etc/systemd/system/apiserver.service; enabled; vendor preset: enabled)
         Active: active (running) since Sat 2021-02-06 01:59:28 UTC; 22s ago
           Docs: https://github.com/coreos/etcd
       Main PID: 23441 (kube-apiserver)
          Tasks: 7 (limit: 2281)
         Memory: 301.2M
         CGroup: /system.slice/apiserver.service
                 └─23441 /usr/local/bin/kube-apiserver --v=0 --logtostderr=true --allow-privileged=true --bind-address=0.0.0.0 -->

    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: I0206 01:59:28.485105 23441 shared_informer.go:240] Waiting for caches to syn>
    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: E0206 01:59:28.614593 23441 controller.go:152] Unable to remove old endpoints>
    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: I0206 01:59:28.664503 23441 shared_informer.go:247] Caches are synced for clu>
    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: I0206 01:59:28.668346 23441 cache.go:39] Caches are synced for APIServiceRegi>
    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: I0206 01:59:28.675642 23441 cache.go:39] Caches are synced for AvailableCondi>
    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: I0206 01:59:28.676190 23441 cache.go:39] Caches are synced for autoregister c>
    Feb 06 01:59:28 k8s-m2 kube-apiserver[23441]: I0206 01:59:28.687991 23441 shared_informer.go:247] Caches are synced for crd>
    Feb 06 01:59:29 k8s-m2 kube-apiserver[23441]: I0206 01:59:29.456591 23441 controller.go:132] OpenAPI AggregationController:>
    Feb 06 01:59:29 k8s-m2 kube-apiserver[23441]: I0206 01:59:29.456849 23441 controller.go:132] OpenAPI AggregationController:>
    Feb 06 01:59:29 k8s-m2 kube-apiserver[23441]: I0206 01:59:29.474785 23441 storage_scheduling.go:143] all system priority cl>

On k8s-m3, create the /etc/systemd/system/apiserver.service file.

    $ vim /etc/systemd/system/apiserver.service
    [Unit]
    Description=k8s API Server
    Documentation=https://github.com/coreos/etcd
    After=network.target

    [Service]
    User=root
    Type=notify
    ExecStart=kube-apiserver \
      --v=0 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=5443 \
      --insecure-port=0 \
      --advertise-address=192.168.20.20 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.20.20:2381 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=front-proxy-client \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --disable-admission-plugins=PersistentVolumeLabel \
      --enable-admission-plugins=NodeRestriction \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=128 \
      --audit-log-path=/var/log/kubernetes/audit.log \
      --audit-policy-file=/etc/kubernetes/audit/policy.yml \
      --encryption-provider-config=/etc/kubernetes/encryption/config.yml \
      --event-ttl=1h
    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=40000

    [Install]
    WantedBy=multi-user.target

On k8s-m3, create the /etc/kubernetes/encryption/config.yml and /etc/kubernetes/audit/policy.yml files.

    $ mkdir -p /etc/kubernetes/encryption/
    $ vim /etc/kubernetes/encryption/config.yml
    kind: EncryptionConfig
    apiVersion: v1
    resources:
      - resources:
          - secrets
        providers:
          - aescbc:
              keys:
                - name: key1
                  secret: 8a22dac954598020c9eeab1c368f7c82
          - identity: {}

    $ mkdir -p /etc/kubernetes/audit
    $ vim /etc/kubernetes/audit/policy.yml
    apiVersion: audit.k8s.io/v1beta1
    kind: Policy
    rules:
    - level: Metadata

On k8s-m3, start the Kubernetes API server.

    $ systemctl enable apiserver
    $ systemctl start apiserver

On k8s-m3, check the Kubernetes API server status.

    $ systemctl status apiserver
    ● apiserver.service - k8s API Server
         Loaded: loaded (/etc/systemd/system/apiserver.service; enabled; vendor preset: enabled)
         Active: active (running) since Sat 2021-02-06 02:01:07 UTC; 15s ago
           Docs: https://github.com/coreos/etcd
       Main PID: 11887 (kube-apiserver)
          Tasks: 7 (limit: 2281)
         Memory: 294.7M
         CGroup: /system.slice/apiserver.service
                 └─11887 /usr/local/bin/kube-apiserver --v=0 --logtostderr=true --allow-privileged=true --bind-address=0.0.0.0 --s>

    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: I0206 02:01:07.599249 11887 shared_informer.go:240] Waiting for caches to sync>
    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: I0206 02:01:07.692457 11887 cache.go:39] Caches are synced for APIServiceRegis>
    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: I0206 02:01:07.698206 11887 cache.go:39] Caches are synced for AvailableCondit>
    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: I0206 02:01:07.698808 11887 shared_informer.go:247] Caches are synced for clus>
    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: I0206 02:01:07.698908 11887 cache.go:39] Caches are synced for autoregister co>
    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: I0206 02:01:07.719845 11887 shared_informer.go:247] Caches are synced for crd->
    Feb 06 02:01:07 k8s-m3 kube-apiserver[11887]: E0206 02:01:07.720204 11887 controller.go:152] Unable to remove old endpoints >
    Feb 06 02:01:08 k8s-m3 kube-apiserver[11887]: I0206 02:01:08.583628 11887 controller.go:132] OpenAPI AggregationController: >
    Feb 06 02:01:08 k8s-m3 kube-apiserver[11887]: I0206 02:01:08.583926 11887 controller.go:132] OpenAPI AggregationController: >
    Feb 06 02:01:08 k8s-m3 kube-apiserver[11887]: I0206 02:01:08.611881 11887 storage_scheduling.go:143] all system priority cla>

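Scheduler

Each time a Pod is created, the Scheduler first selects the Worker Nodes that meet the Pod's requirements in the filtering stage, then scores each of those nodes in the scoring stage. Scores are based on the node's current state; a node with fewer remaining resources may score lower. The highest-scoring node becomes the Worker Node where the Pod is placed. If several nodes have the same score, one of them is chosen at random.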
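To make the two stages concrete, here is a toy model of filtering followed by scoring. It is only an illustration under heavy assumptions and not how kube-scheduler is implemented: pick_node, the three-column input format, and the scoring formula (leftover CPU millicores plus leftover memory in MiB) are all made up for this sketch, and on a tie it keeps the first node instead of choosing at random.

```shell
#!/bin/sh
# Toy sketch of "filter, then score" (NOT the real kube-scheduler logic).
# pick_node REQ_CPU_M REQ_MEM_MI
# Reads lines of "name free_cpu_millicores free_mem_mib" on stdin and
# prints the chosen node, or nothing if no node can fit the request.
pick_node() {
    awk -v cpu="$1" -v mem="$2" '
        # filtering: keep only nodes with enough free CPU and memory
        $2 >= cpu && $3 >= mem {
            # scoring: more leftover resources gives a higher score (toy formula)
            score = ($2 - cpu) + ($3 - mem)
            if (best == "" || score > best_score) {
                best = $1
                best_score = score
            }
        }
        END { if (best != "") print best }
    '
}
```

For example, `printf 'k8s-n1 300 1024\nk8s-n2 800 512\n' | pick_node 500 256` filters out k8s-n1 because it lacks CPU and prints k8s-n2.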

Note: the Scheduler must be installed and started on k8s-m1, k8s-m2, and k8s-m3.

On k8s-m1, create the /etc/systemd/system/scheduler.service file.

    $ vim /etc/systemd/system/scheduler.service
    [Unit]
    Description=k8s Scheduler Server
    Documentation=https://github.com/coreos/etcd

    [Service]
    User=root
    ExecStart=kube-scheduler \
      --v=0 \
      --logtostderr=true \
      --address=127.0.0.1 \
      --leader-elect=true \
      --kubeconfig=/etc/kubernetes/scheduler.conf
    Restart=on-failure
    RestartSec=10

    [Install]
    WantedBy=multi-user.target

On k8s-m1, start the Kubernetes Scheduler.

    $ systemctl enable scheduler
    $ systemctl start scheduler

On k8s-m1, check the Kubernetes Scheduler status.

    $ systemctl status scheduler
    ● scheduler.service - k8s Scheduler Server
         Loaded: loaded (/etc/systemd/system/scheduler.service; disabled; vendor preset: enabled)
         Active: active (running) since Thu 2021-02-04 07:55:58 UTC; 6s ago
           Docs: https://github.com/coreos/etcd
       Main PID: 42594 (kube-scheduler)
          Tasks: 6 (limit: 2281)
         Memory: 12.7M
         CGroup: /system.slice/scheduler.service
                 └─42594 /usr/local/bin/kube-scheduler --v=0 --logtostderr=true --address=127.0.0.1 --leader-elect=true --kubeconfig=/etc/kubernetes/scheduler.conf

    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: W0204 07:55:59.367754 42594 authorization.go:156] No authorization-kubeconfig provided, so SubjectAccessReview of authorization tokens won't work.
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.378086 42594 registry.go:173] Registering SelectorSpread plugin
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.378268 42594 registry.go:173] Registering SelectorSpread plugin
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: W0204 07:55:59.379735 42594 authorization.go:47] Authorization is disabled
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: W0204 07:55:59.379837 42594 authentication.go:40] Authentication is disabled
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.379924 42594 deprecated_insecure_serving.go:51] Serving healthz insecurely on 127.0.0.1:10251
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.381294 42594 secure_serving.go:197] Serving securely on [::]:10259
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.382191 42594 tlsconfig.go:240] Starting DynamicServingCertificateController
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.482170 42594 leaderelection.go:243] attempting to acquire leader lease kube-system/kube-scheduler...
    Feb 04 07:55:59 k8s-m1 kube-scheduler[42594]: I0204 07:55:59.502458 42594 leaderelection.go:253] successfully acquired lease kube-system/kube-scheduler

On k8s-m2, create the /etc/systemd/system/scheduler.service file.

```
$ vim /etc/systemd/system/scheduler.service
[Unit]
Description=k8s Scheduler Server
Documentation=https://github.com/coreos/etcd

[Service]
User=root
ExecStart=kube-scheduler \
  --v=0 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --leader-elect=true \
  --kubeconfig=/etc/kubernetes/scheduler.conf

Restart=on-failure
RestartSec=10

[Install]
WantedBy=multi-user.target
```

On k8s-m2, enable and start the Kubernetes Scheduler.

```
$ systemctl enable scheduler
$ systemctl start scheduler
```

On k8s-m2, check the status of the Kubernetes Scheduler.

```
$ systemctl status scheduler
● scheduler.service - k8s Scheduler Server
     Loaded: loaded (/etc/systemd/system/scheduler.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2021-02-04 07:56:40 UTC; 1min 31s ago
       Docs: https://github.com/coreos/etcd
   Main PID: 7418 (kube-scheduler)
      Tasks: 6 (limit: 2281)
     Memory: 12.1M
     CGroup: /system.slice/scheduler.service
             └─7418 /usr/local/bin/kube-scheduler --v=0 --logtostderr=true --address=127.0.0.1 --leader-elect=true --kubeconfig>

Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: W0204 07:56:41.731915 7418 authentication.go:289] No authentication-kubeconfig >
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: W0204 07:56:41.732028 7418 authorization.go:156] No authorization-kubeconfig pr>
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: I0204 07:56:41.744388 7418 registry.go:173] Registering SelectorSpread plugin
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: I0204 07:56:41.744586 7418 registry.go:173] Registering SelectorSpread plugin
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: W0204 07:56:41.746459 7418 authorization.go:47] Authorization is disabled
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: W0204 07:56:41.746668 7418 authentication.go:40] Authentication is disabled
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: I0204 07:56:41.746766 7418 deprecated_insecure_serving.go:51] Serving healthz i>
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: I0204 07:56:41.749890 7418 secure_serving.go:197] Serving securely on [::]:10259
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: I0204 07:56:41.750208 7418 tlsconfig.go:240] Starting DynamicServingCertificate>
Feb 04 07:56:41 k8s-m2 kube-scheduler[7418]: I0204 07:56:41.850345 7418 leaderelection.go:243] attempting to acquire leader
```

On k8s-m3, create the /etc/systemd/system/scheduler.service file.

```
$ vim /etc/systemd/system/scheduler.service
[Unit]
Description=k8s Scheduler Server
Documentation=https://github.com/coreos/etcd

[Service]
User=root
ExecStart=kube-scheduler \
  --v=0 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --leader-elect=true \
  --kubeconfig=/etc/kubernetes/scheduler.conf

Restart=on-failure
RestartSec=10

[Install]
WantedBy=multi-user.target
```

On k8s-m3, enable and start the Kubernetes Scheduler.

```
$ systemctl enable scheduler
$ systemctl start scheduler
```

On k8s-m3, check the status of the Kubernetes Scheduler.

```
$ systemctl status scheduler
● scheduler.service - k8s Scheduler Server
     Loaded: loaded (/etc/systemd/system/scheduler.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2021-02-04 07:59:24 UTC; 28s ago
       Docs: https://github.com/coreos/etcd
   Main PID: 17623 (kube-scheduler)
      Tasks: 6 (limit: 2281)
     Memory: 11.5M
     CGroup: /system.slice/scheduler.service
             └─17623 /usr/local/bin/kube-scheduler --v=0 --logtostderr=true --address=127.0.0.1 --leader-elect=true --kubeconfig=/etc/kubernet>

Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: W0204 07:59:24.487163 17623 authentication.go:289] No authentication-kubeconfig provided in or>
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: W0204 07:59:24.487313 17623 authorization.go:156] No authorization-kubeconfig provided, so Sub>
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: I0204 07:59:24.500454 17623 registry.go:173] Registering SelectorSpread plugin
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: I0204 07:59:24.500674 17623 registry.go:173] Registering SelectorSpread plugin
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: W0204 07:59:24.502747 17623 authorization.go:47] Authorization is disabled
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: W0204 07:59:24.502931 17623 authentication.go:40] Authentication is disabled
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: I0204 07:59:24.503030 17623 deprecated_insecure_serving.go:51] Serving healthz insecurely on 1>
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: I0204 07:59:24.503870 17623 secure_serving.go:197] Serving securely on [::]:10259
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: I0204 07:59:24.504056 17623 tlsconfig.go:240] Starting DynamicServingCertificateController
Feb 04 07:59:24 k8s-m3 kube-scheduler[17623]: I0204 07:59:24.604511 17623 leaderelection.go:243] attempting to acquire leader lease kube-sy>
```
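With --leader-elect=true, only one of the three schedulers should be active at a time. In the status output above, k8s-m1 logs "successfully acquired lease", while the logs shown for k8s-m2 and k8s-m3 stop at "attempting to acquire leader", which suggests they are standing by. As a rough sketch, the journal text can be classified with a small helper. The name `leader_state` is made up for this example and is not part of Kubernetes; the unit name `scheduler` is the one used in this article.

```shell
#!/bin/sh
# leader_state: hypothetical helper, not part of Kubernetes.
# Reads log text on stdin and prints "leader" if the lease-acquired
# message appears anywhere in it, otherwise "standby".
leader_state() {
  if grep -q 'successfully acquired lease'; then
    echo leader
  else
    echo standby
  fi
}

# Usage on a control-plane node (needs systemd and journalctl):
#   journalctl -u scheduler --no-pager | leader_state
```

This only reports whether the acquisition message appears in the text it is given. It cannot tell whether the lease was lost again later, so treat it as a quick sanity check rather than a definitive answer.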

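Controller Manager

The Controller Manager is responsible for driving the actual state of the cluster toward the state the user declared. It works like an endless loop that keeps comparing the current state of Pods and other resources with the desired state stored in etcd (read through the API server). If there are fewer Pods than the user configured, it creates more until the desired count is reached; if there are more, it removes the surplus.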
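The comparison described above can be illustrated with a toy function. This is only a sketch of the idea, not how kube-controller-manager is actually implemented; `reconcile` and its output format are invented for this example.

```shell
#!/bin/sh
# reconcile DESIRED CURRENT
# Hypothetical illustration of one pass of a control loop: compare the
# desired replica count with the current one and print the action that
# would close the gap.
reconcile() {
  desired=$1
  current=$2
  if [ "$current" -lt "$desired" ]; then
    echo "create $((desired - current))"
  elif [ "$current" -gt "$desired" ]; then
    echo "delete $((current - desired))"
  else
    echo "noop"
  fi
}

reconcile 3 1   # fewer Pods than desired
reconcile 3 5   # more Pods than desired
reconcile 3 3   # already converged
```

A real controller repeats this kind of comparison continuously and reacts to change notifications from the API server instead of being called by hand.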

Note: the service must be installed and started on k8s-m1, k8s-m2, and k8s-m3.

On k8s-m1, create the /etc/systemd/system/controller.service file.

```
$ vim /etc/systemd/system/controller.service
[Unit]
Description=k8s Controller Server
Documentation=https://github.com/coreos/etcd

[Service]
User=root
ExecStart=kube-controller-manager \
  --v=0 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --root-ca-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
  --kubeconfig=/etc/kubernetes/controller-manager.conf \
  --leader-elect=true \
  --use-service-account-credentials=true \
  --node-monitor-grace-period=40s \
  --node-monitor-period=5s \
  --pod-eviction-timeout=2m0s \
  --controllers=*,bootstrapsigner,tokencleaner \
  --allocate-node-cidrs=true \
  --cluster-cidr=10.244.0.0/16 \
  --node-cidr-mask-size=24

Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target
```
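With --allocate-node-cidrs=true, the controller manager carves a per-node Pod range out of --cluster-cidr, and --node-cidr-mask-size sets the size of each range. As a quick back-of-the-envelope check of the values used in this unit file, the arithmetic can be written out as below. The function names are illustrative only.

```shell
#!/bin/sh
# node_subnets CLUSTER_PREFIX NODE_MASK
# How many node-sized subnets fit into the cluster CIDR.
node_subnets() {
  echo $((1 << ($2 - $1)))
}

# addrs_per_node NODE_MASK
# How many IPv4 addresses each node subnet contains.
addrs_per_node() {
  echo $((1 << (32 - $1)))
}

node_subnets 16 24    # cluster /16 split into /24 blocks -> 256
addrs_per_node 24     # addresses in one /24 block        -> 256
```

So 10.244.0.0/16 with a /24 node mask leaves room for up to 256 node ranges of 256 addresses each, which is far more than the five nodes in this environment need.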

On k8s-m1, enable and start the Kubernetes Controller Manager.

```
$ systemctl enable controller
$ systemctl start controller
```

On k8s-m1, check the status of the Kubernetes Controller Manager.

```
$ systemctl status controller
● controller.service - k8s Controller Server
     Loaded: loaded (/etc/systemd/system/controller.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2021-02-04 08:05:58 UTC; 49s ago
       Docs: https://github.com/coreos/etcd
   Main PID: 42654 (kube-controller)
      Tasks: 4 (limit: 2281)
     Memory: 33.3M
     CGroup: /system.slice/controller.service
             └─42654 /usr/local/bin/kube-controller-manager --v=0 --logtostderr=true --address=127.0.0.1 --root-ca-file=/etc/kubernetes/pki/ca.pem --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem --service-a>

Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.085006 42654 disruption.go:339] Sending events to api server.
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.086305 42654 shared_informer.go:247] Caches are synced for deployment
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.143749 42654 shared_informer.go:247] Caches are synced for resource quota
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.148362 42654 shared_informer.go:247] Caches are synced for resource quota
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.185093 42654 shared_informer.go:247] Caches are synced for endpoint
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.187570 42654 shared_informer.go:247] Caches are synced for endpoint_slice_mirroring
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.196972 42654 shared_informer.go:240] Waiting for caches to sync for garbage collector
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.497394 42654 shared_informer.go:247] Caches are synced for garbage collector
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.534939 42654 shared_informer.go:247] Caches are synced for garbage collector
Feb 04 08:06:17 k8s-m1 kube-controller-manager[42654]: I0204 08:06:17.534985 42654 garbagecollector.go:137] Garbage collector: all resource monitors have synced. Proceeding to collect garbage
```

On k8s-m2, create the /etc/systemd/system/controller.service file.

```
$ vim /etc/systemd/system/controller.service
[Unit]
Description=k8s Controller Server
Documentation=https://github.com/coreos/etcd

[Service]
User=root
ExecStart=kube-controller-manager \
  --v=0 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --root-ca-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
  --kubeconfig=/etc/kubernetes/controller-manager.conf \
  --leader-elect=true \
  --use-service-account-credentials=true \
  --node-monitor-grace-period=40s \
  --node-monitor-period=5s \
  --pod-eviction-timeout=2m0s \
  --controllers=*,bootstrapsigner,tokencleaner \
  --allocate-node-cidrs=true \
  --cluster-cidr=10.244.0.0/16 \
  --node-cidr-mask-size=24

Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target
```

On k8s-m2, enable and start the Kubernetes Controller Manager.

```
$ systemctl enable controller
$ systemctl start controller
```

On k8s-m2, check the status of the Kubernetes Controller Manager.

```
$ systemctl status controller
● controller.service - k8s Controller Server
     Loaded: loaded (/etc/systemd/system/controller.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2021-02-04 08:22:15 UTC; 20s ago
       Docs: https://github.com/coreos/etcd
   Main PID: 7552 (kube-controller)
      Tasks: 4 (limit: 2281)
     Memory: 18.5M
     CGroup: /system.slice/controller.service
             └─7552 /usr/local/bin/kube-controller-manager --v=0 --logtostderr=true --address=127.0.0.1 --root-ca-file=/etc/kub>

Feb 04 08:22:15 k8s-m2 kube-controller-manager[7552]: Flag --address has been deprecated, see --bind-address instead.
Feb 04 08:22:15 k8s-m2 kube-controller-manager[7552]: I0204 08:22:15.661447 7552 serving.go:331] Generated self-signed cert >
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: W0204 08:22:16.017686 7552 authentication.go:265] No authentication-ku>
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: W0204 08:22:16.017920 7552 authentication.go:289] No authentication-ku>
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: W0204 08:22:16.018021 7552 authorization.go:156] No authorization-kube>
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: I0204 08:22:16.018115 7552 controllermanager.go:175] Version: v1.19.7
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: I0204 08:22:16.018860 7552 secure_serving.go:197] Serving securely on >
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: I0204 08:22:16.019302 7552 deprecated_insecure_serving.go:53] Serving >
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: I0204 08:22:16.019418 7552 leaderelection.go:243] attempting to acquir>
Feb 04 08:22:16 k8s-m2 kube-controller-manager[7552]: I0204 08:22:16.019671 7552 tlsconfig.go:240] Starting DynamicServingCe>
```
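To see which instance currently holds the leader lock, the lock object itself can be inspected instead of the logs. The sketch below rests on two assumptions: that this Kubernetes version records the lock in a Lease named kube-controller-manager in the kube-system namespace (the scheduler log above mentions a lease called kube-system/kube-scheduler), and that the holder identity has the form `<hostname>_<uuid>`. `holder_host` is a hypothetical helper, and the identity string passed to it here is a made-up placeholder, not real output.

```shell
#!/bin/sh
# holder_host IDENTITY
# Hypothetical helper: strip the "_<uuid>" suffix from a leader-election
# holder identity and print only the hostname part.
holder_host() {
  echo "${1%%_*}"
}

# Placeholder identity for illustration only.
holder_host "k8s-m1_00000000-0000-0000-0000-000000000000"

# Usage against a running cluster (needs kubectl and a working kubeconfig;
# the Lease name is an assumption, see the paragraph above):
#   holder_host "$(kubectl -n kube-system get lease kube-controller-manager \
#     -o jsonpath='{.spec.holderIdentity}')"
```

If the Lease does not exist on your cluster, the lock may be stored in a different resource type, in which case the kubectl query would need to be adjusted.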